Wavelet Transform and Attentional Dual-Path EEG Model for Virtual Reality Motion Sickness Detection


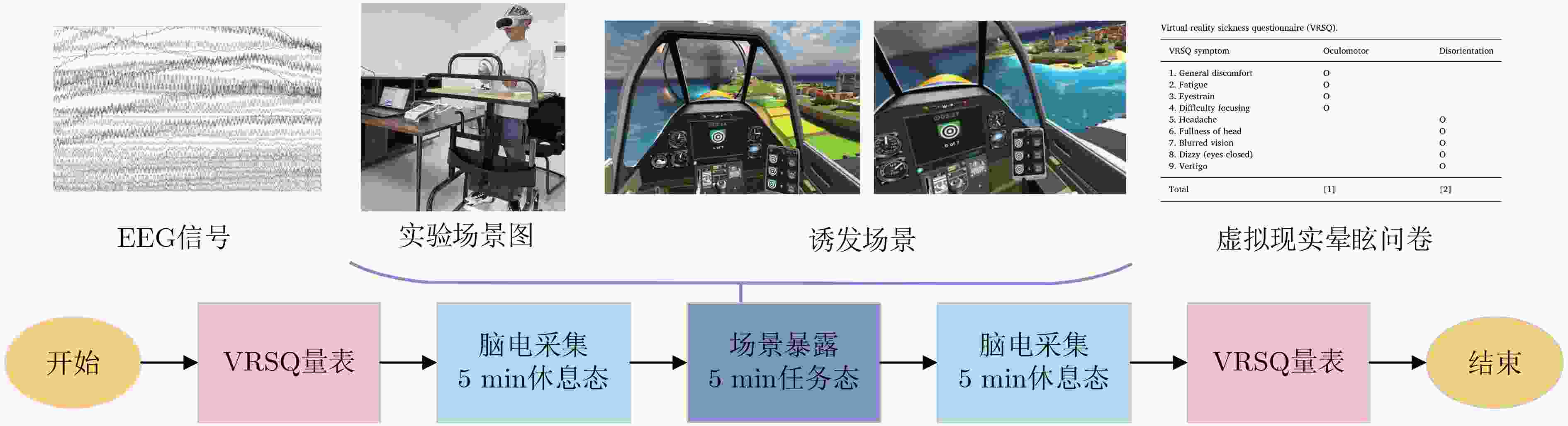

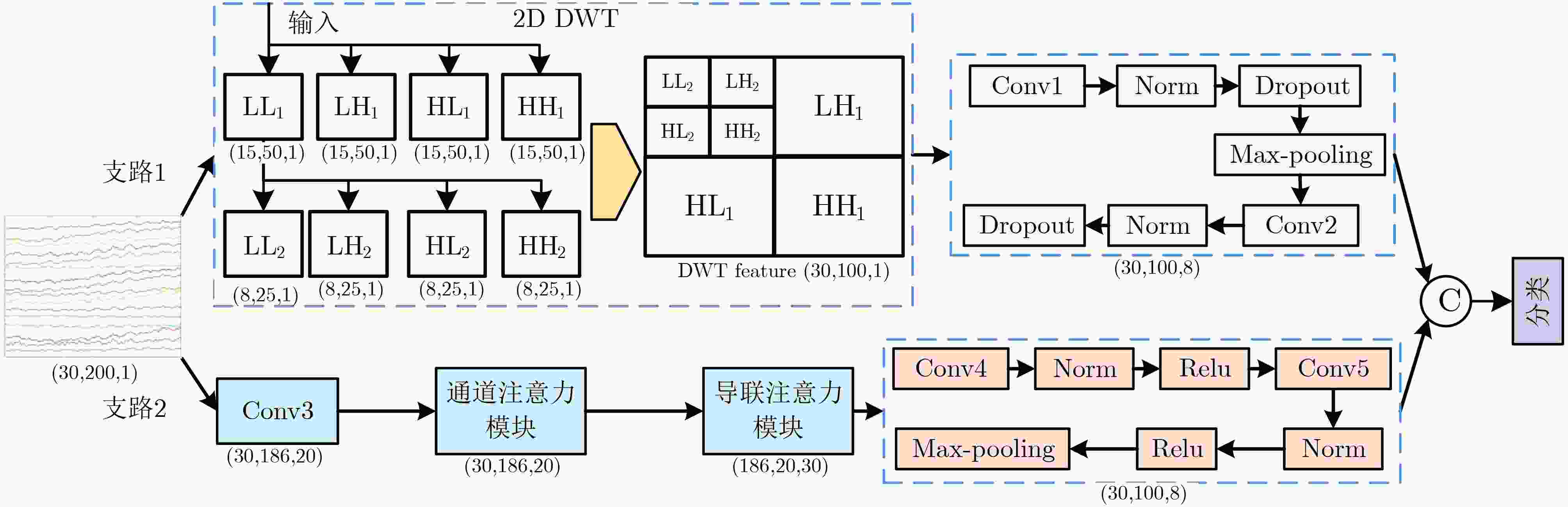

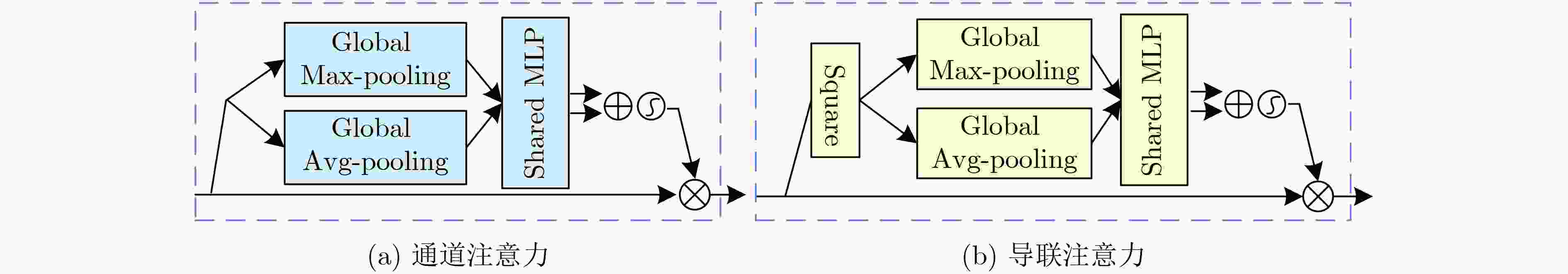

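Summary: Virtual reality motion sickness (VRMS) is the severe dizziness that users experience in virtual reality (VR) environments because of a mismatch between vestibular and visual information, and it hinders the application and adoption of immersive VR technology. This paper proposes a dual-path fusion model that combines the wavelet transform with attention mechanisms (WTATNet). By decoupling the spatiotemporal features of resting-state electroencephalogram (EEG) recorded after exposure to VR motion stimuli, the model offers a new approach to the objective detection of VRMS. The model has two branches. Branch 1 applies a two-dimensional discrete wavelet transform over the time and electrode dimensions of the EEG to compute wavelet coefficients, which are then fed into convolutional layers for feature extraction. In branch 2, the EEG is first filtered by a one-dimensional convolutional layer, after which a channel attention module and an electrode attention module successively strengthen the key features along the channel and electrode dimensions. Finally, the features of the two branches are fused and classified. The VR game Ultrawings2 was used to induce VRMS in the subjects, and the resting-state EEG recorded before and after the task was used to evaluate WTATNet, which classifies resting-state EEG as VRMS or non-VRMS. The model's accuracy, F1-score, precision, and recall for VRMS recognition (averaged over ten-fold cross-validation) were 98.39%, 98.39%, 98.38%, and 98.40%, respectively, better than current state-of-the-art EEG recognition models. The results indicate that the proposed method can detect VRMS and can be used to further study the causes and mitigation of VRMS, which offers some guidance for optimizing VR systems.

Abstract: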

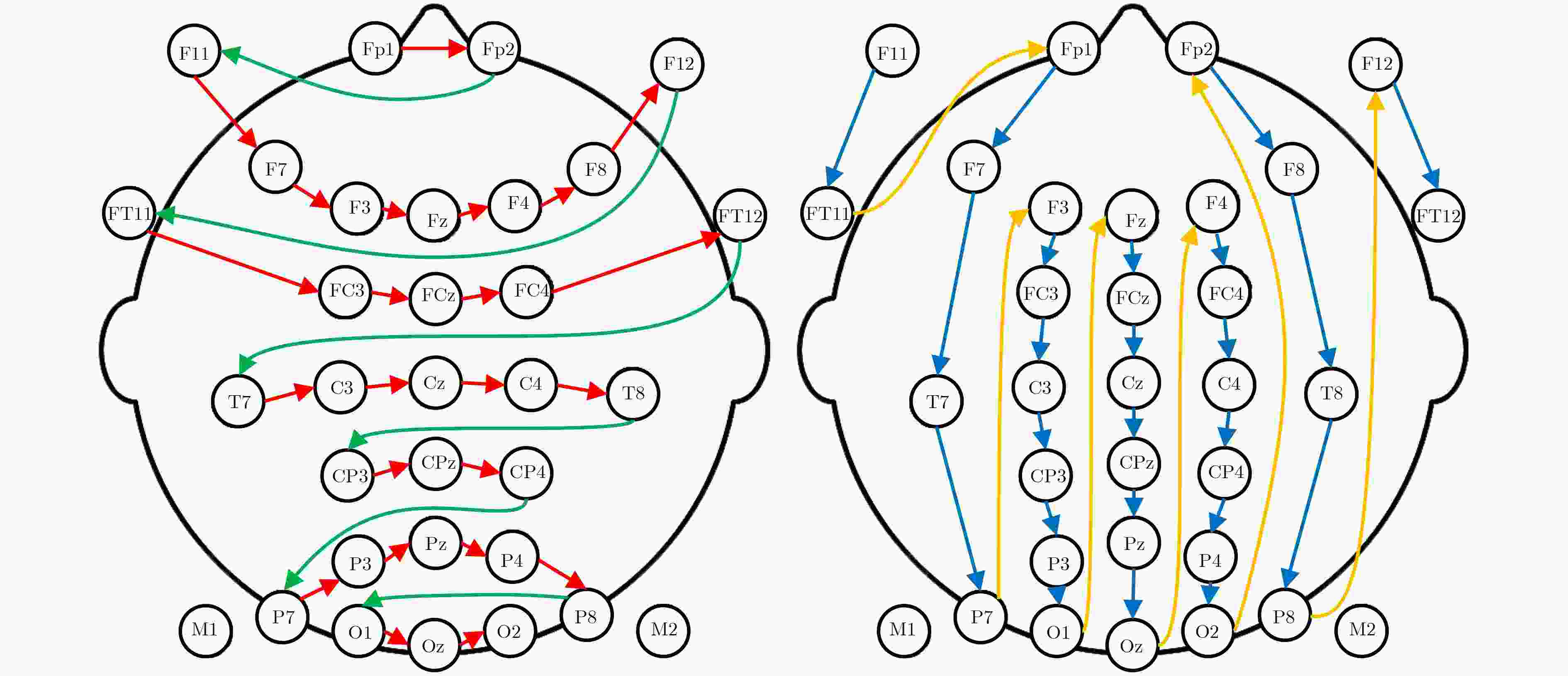

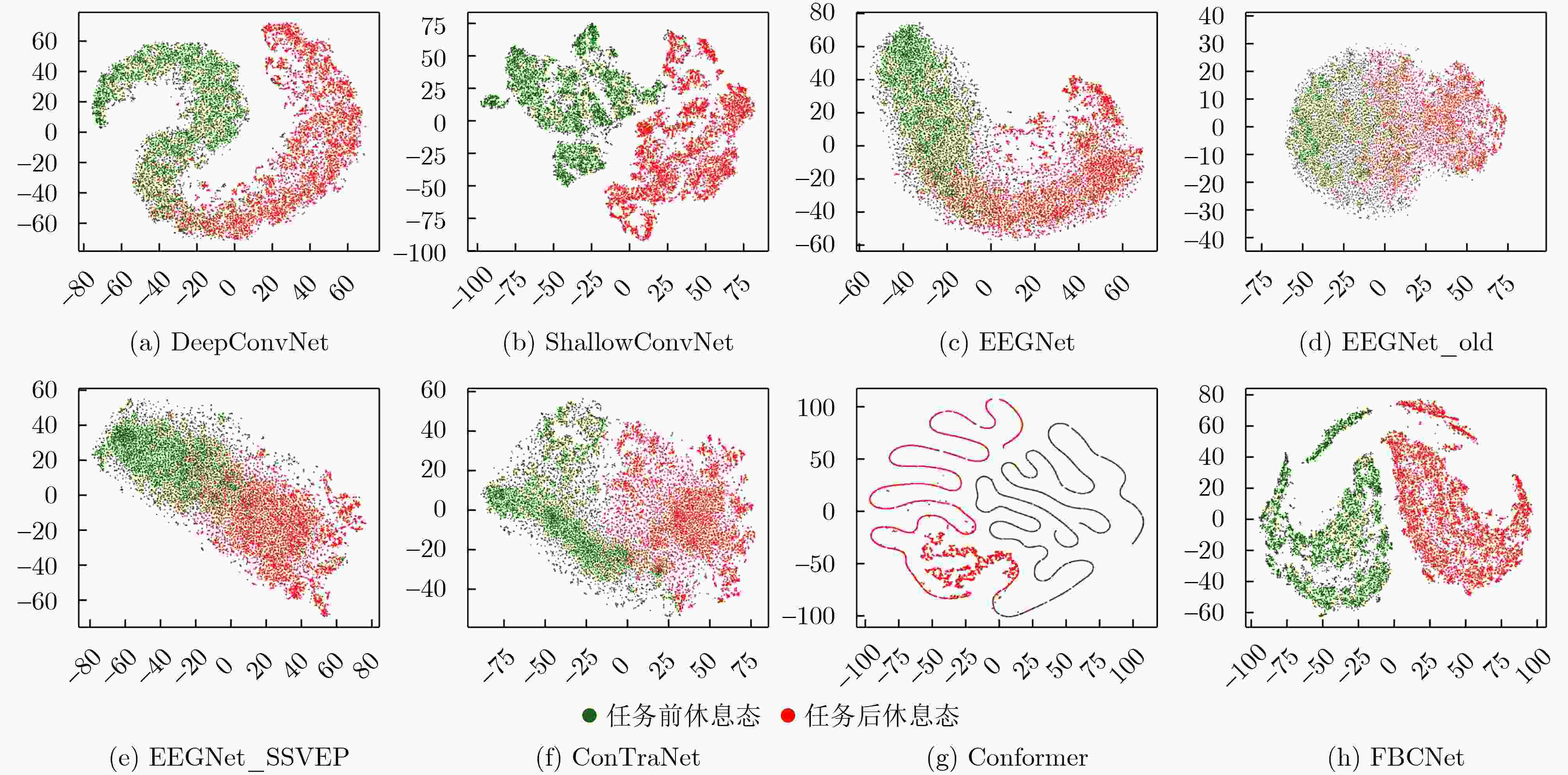

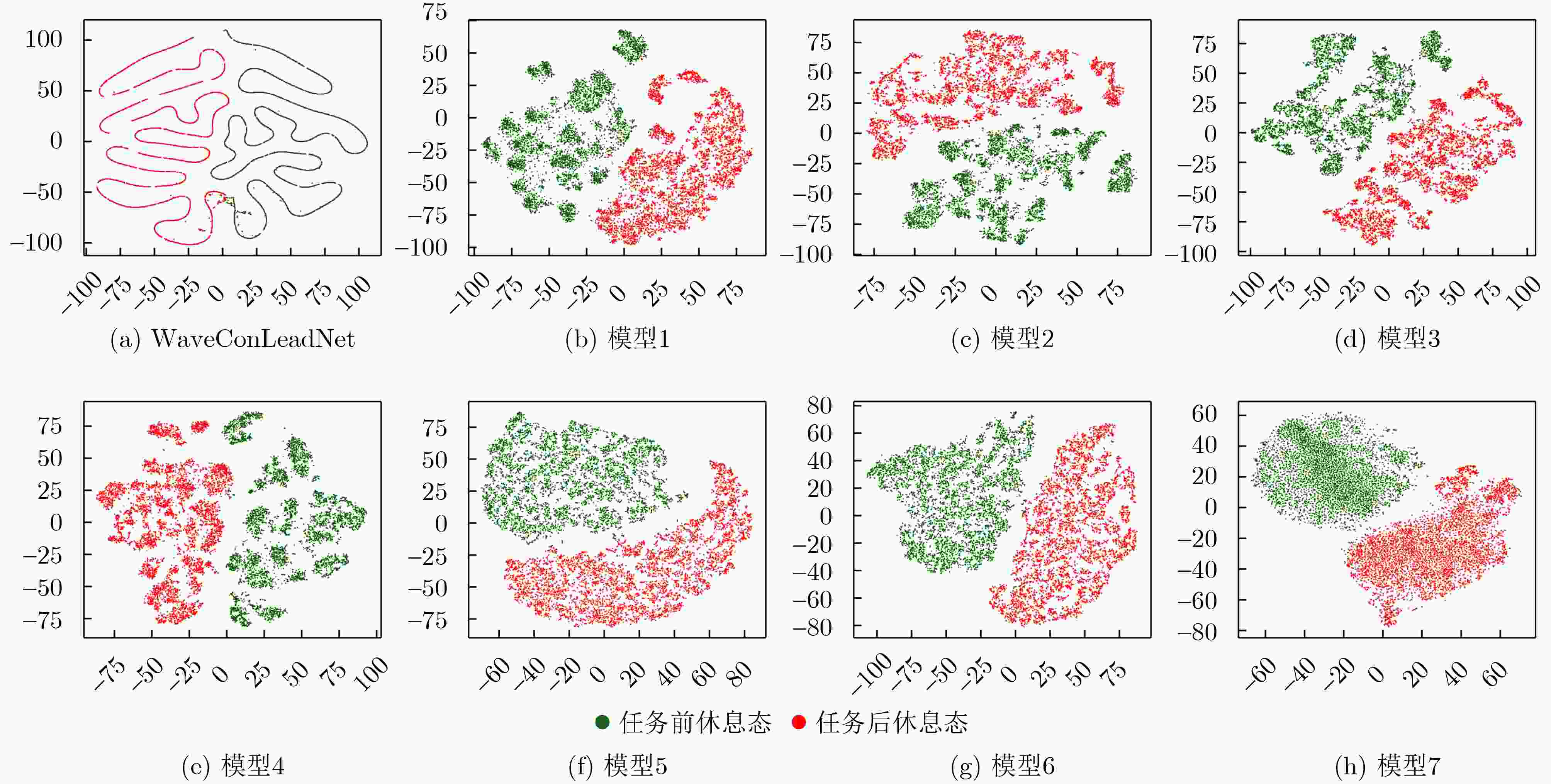

Objective: Virtual Reality Motion Sickness (VRMS) is a barrier to the wider adoption of immersive Virtual Reality (VR). It is primarily caused by sensory conflict between the vestibular and visual systems. Existing assessments rely on subjective reports, which disrupt immersion and cannot provide real-time measurements, so an objective detection method is needed. This study proposes a dual-path fusion model, the Wavelet Transform ATtentional Network (WTATNet), which integrates the wavelet transform with attention mechanisms. WTATNet classifies resting-state ElectroEncephaloGram (EEG) signals collected before and after exposure to VR motion stimuli, supporting VRMS detection and research on its mechanisms and mitigation strategies.

Methods: WTATNet contains two parallel pathways for EEG feature extraction. The first arranges the EEG as a two-dimensional matrix (electrodes by time), with the electrodes ordered horizontally or vertically according to their spatial layout on the scalp, and applies a Two-Dimensional Discrete Wavelet Transform (2D-DWT) across both the time and electrode dimensions. This decomposition captures multi-scale spatiotemporal features, which are then processed by Convolutional Neural Network (CNN) layers. The second pathway applies a one-dimensional CNN for initial filtering, followed by a dual-attention structure consisting of a channel attention module and an electrode attention module. These modules reweight features across channels and electrodes to emphasize task-relevant information. Features from both pathways are fused and passed through fully connected layers to classify EEG signals as pre-exposure (non-VRMS) or post-exposure (VRMS), with the labels validated by subjective questionnaires. EEG data were collected from 22 subjects in whom VRMS was induced with the game “Ultrawings2.” Ten-fold cross-validation was used for training and evaluation, with accuracy, precision, recall, and F1-score as metrics.
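The first pathway can be illustrated with a minimal sketch of a one-level 2D-DWT over an electrodes-by-time matrix. The abstract does not name the wavelet basis, the number of decomposition levels, or the segment length, so the Haar wavelet, the single level, and the 256-sample segment below are assumptions made only for illustration; the function names are hypothetical and this is not the authors' implementation.

```python
import numpy as np

def haar_dwt_1d(x, axis):
    """One level of the orthonormal Haar DWT along `axis` (length must be even)."""
    x = np.moveaxis(np.asarray(x, dtype=float), axis, -1)
    even, odd = x[..., 0::2], x[..., 1::2]
    approx = (even + odd) / np.sqrt(2.0)   # low-pass: scaled pairwise sums
    detail = (even - odd) / np.sqrt(2.0)   # high-pass: scaled pairwise differences
    return np.moveaxis(approx, -1, axis), np.moveaxis(detail, -1, axis)

def haar_dwt_2d(eeg):
    """One-level 2D Haar DWT of an (electrodes, time) matrix.

    Returns four sub-bands, each of shape (electrodes / 2, time / 2):
    ll = low-pass in time and across electrodes,
    lh = low-pass in time, high-pass across electrodes,
    hl = high-pass in time, low-pass across electrodes,
    hh = high-pass in both.
    """
    lo_t, hi_t = haar_dwt_1d(eeg, axis=1)   # filter along the time axis
    ll, lh = haar_dwt_1d(lo_t, axis=0)      # then along the electrode axis
    hl, hh = haar_dwt_1d(hi_t, axis=0)
    return ll, lh, hl, hh

# Synthetic data: 30 electrodes (as in Table 1), assumed 256 time samples.
rng = np.random.default_rng(0)
eeg = rng.standard_normal((30, 256))
ll, lh, hl, hh = haar_dwt_2d(eeg)

# Stack the sub-bands as four feature maps that a 2D CNN could consume.
coeffs = np.stack([ll, lh, hl, hh])
print(coeffs.shape)
```

Because the electrode axis is filtered in adjacent pairs, the row order of the matrix determines which electrodes are combined, which is why the ordering of electrodes matters for this pathway.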
Results and Discussions: WTATNet achieved high VRMS-related EEG classification performance, with an average accuracy of 98.39%, F1-score of 98.39%, precision of 98.38%, and recall of 98.40%. It outperformed classical and state-of-the-art EEG models, including ShallowConvNet, EEGNet, Conformer, and FBCNet (Table 2). Ablation experiments (Tables 3 and 4) showed that removing the wavelet transform path, the electrode attention module, or the channel attention module reduced accuracy by 1.78, 1.36, and 1.01 percentage points, respectively. The 2D-DWT performed better than the one-dimensional DWT, supporting the value of joint spatiotemporal analysis. Experiments with randomized electrode ordering (Table 5) produced lower accuracy than spatially coherent layouts, indicating that the 2D-DWT leverages inherent spatial correlations among electrodes. Feature visualizations using t-SNE (Figures 5 and 6) showed that WTATNet produced more discriminative features than the baseline and ablated variants.

Conclusions: The dual-path WTATNet model integrates the wavelet transform and attention mechanisms to achieve accurate VRMS detection from resting-state EEG. Its design combines interpretable, multi-scale spatiotemporal features from the 2D-DWT with adaptive channel-level and electrode-level weighting. The experimental results confirm state-of-the-art performance and show that WTATNet offers an objective, robust, and non-intrusive VRMS detection method. It provides a technical foundation for studies of VRMS neural mechanisms and for countermeasure development. WTATNet also shows potential for generalization to other EEG decoding tasks in neuroscience and clinical research.
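For readers who want to reproduce the four reported metrics on their own predictions, the sketch below computes accuracy together with macro-averaged precision, recall, and F1 for a two-class problem. The abstract does not say how the per-class scores were averaged, so macro averaging is an assumption here, and the label vectors are made-up placeholders rather than data from the study.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 for labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    per_class = []
    for cls in (0, 1):
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append((precision, recall, f1))
    # Macro average: unweighted mean of the two per-class scores.
    macro = [sum(scores[i] for scores in per_class) / 2 for i in range(3)]
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": macro[0],
        "recall": macro[1],
        "f1": macro[2],
    }

# Placeholder labels: 0 = pre-exposure (non-VRMS), 1 = post-exposure (VRMS).
y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 1, 1]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In a ten-fold setup, a function like this would be applied to each held-out fold and the ten results summarized as a mean and standard deviation.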

Table 1. One-dimensional electrode ordering methods

| Method | Electrode order |
| --- | --- |
| Horizontal | [Fp1, Fp2, F11, F7, F3, Fz, F4, F8, F12, FT11, FC3, FCz, FC4, FT12, T7, C3, Cz, C4, T8, CP3, CPz, CP4, P7, P3, Pz, P4, P8, O1, Oz, O2] |
| Vertical | [F11, FT11, Fp1, F7, T7, P7, F3, FC3, C3, CP3, P3, O1, Fz, FCz, Cz, CPz, Pz, Oz, F4, FC4, C4, CP4, P4, O2, Fp2, F8, T8, P8, F12, FT12] |
| Random 1 | [O1, C3, P3, C4, F12, FT11, Oz, Pz, FC4, Fp1, F3, Cz, Fz, FT12, FCz, P7, Fp2, F11, P4, F7, CP4, P8, T8, O2, CPz, F8, FC3, T7, CP3, F4] |
| Random 2 | [F8, O2, Fz, P8, F12, O1, FC4, CP4, FCz, P3, T8, F3, F7, Pz, Cz, T7, CPz, FT11, C3, P4, Fp1, Fp2, P7, FC3, CP3, C4, F4, Oz, F11, FT12] |
| Random 3 | [C4, FC4, P7, Fp2, F4, P8, Fp1, P3, FT11, Cz, F3, F11, T8, CP3, F7, F12, O2, F8, Oz, CP4, FT12, CPz, Pz, T7, C3, FC3, P4, FCz, Fz, O1] |

Table 2. Comparison experiment results (mean ± standard deviation, %)

| Model | Accuracy | F1 | Precision | Recall |
| --- | --- | --- | --- | --- |
| WTATNet (this paper) | 98.39±0.56 | 98.39±0.53 | 98.38±0.53 | 98.40±0.52 |
| DeepConvNet | 67.49±6.03 | 64.00±8.13 | 68.12±5.88 | 78.98±2.73 |
| ShallowConvNet | 95.55±4.03 | 95.49±4.13 | 95.44±4.20 | 96.14±3.16 |
| EEGNet | 72.40±4.45 | 70.76±5.32 | 72.91±4.08 | 79.56±2.34 |
| EEGNet_old | 67.78±3.73 | 65.04±4.98 | 68.32±3.58 | 76.79±2.08 |
| EEGNet_SSVEP | 79.25±4.59 | 78.58±4.92 | 79.59±4.42 | 83.68±2.91 |
| ConTraNet[32] | 84.97±6.05 | 84.66±6.57 | 85.09±6.03 | 96.82±3.75 |
| Conformer[33] | 93.34±2.28 | 93.32±2.29 | 93.42±2.24 | 93.84±1.86 |
| FBCNet[34] | 98.04±0.92 | 98.03±0.93 | 98.02±0.95 | 98.08±0.82 |

Table 3. Model ablation results under horizontal electrode ordering (mean ± standard deviation, %)

| Model | Horizontal: accuracy | Horizontal: F1 | Horizontal: precision | Horizontal: recall | Vertical: accuracy | Vertical: F1 | Vertical: precision | Vertical: recall |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| WTATNet | 98.39±0.56 | 98.39±0.53 | 98.38±0.53 | 98.40±0.52 | 97.97±0.50 | 97.97±0.50 | 97.97±0.50 | 97.98±0.49 |
| Model 1 | 96.61±0.55 | 96.60±0.55 | 96.59±0.56 | 96.64±0.54 | 96.12±0.74 | 96.11±0.73 | 96.10±0.73 | 96.16±0.74 |
| Model 2 | 97.03±0.60 | 97.02±0.61 | 96.99±0.63 | 97.15±0.54 | 96.91±0.54 | 96.90±0.54 | 96.86±0.55 | 97.03±0.51 |
| Model 3 | 97.38±0.33 | 97.38±0.33 | 97.36±0.32 | 97.41±0.35 | 94.54±5.37 | 94.46±5.55 | 94.57±5.22 | 95.28±3.82 |
| Model 4 | 96.73±0.61 | 96.72±0.61 | 96.69±0.62 | 96.81±0.59 | 95.66±0.57 | 95.65±0.58 | 95.60±0.61 | 95.81±0.47 |
| Model 5 | 96.57±0.47 | 96.57±0.47 | 96.57±0.47 | 96.58±0.47 | 96.91±0.68 | 96.91±0.68 | 96.89±0.68 | 96.95±0.67 |
| Model 6 | 97.15±0.64 | 97.14±0.64 | 97.13±0.65 | 97.18±0.62 | 97.20±0.46 | 97.20±0.46 | 97.19±0.46 | 97.24±0.44 |
| Model 7 | 96.81±0.34 | 96.80±0.34 | 96.78±0.35 | 96.86±0.32 | 97.05±0.42 | 97.05±0.42 | 97.02±0.42 | 97.12±0.43 |
| Model 8 | 96.61±0.73 | 96.61±0.73 | 96.59±0.74 | 96.65±0.73 | / | / | / | / |

Table 4. WTATNet results under different electrode reordering methods (mean ± standard deviation, %)

| Ordering | Accuracy | F1 | Precision | Recall |
| --- | --- | --- | --- | --- |
| Horizontal | 98.39±0.56 | 98.39±0.53 | 98.38±0.53 | 98.40±0.52 |
| Vertical | 97.97±0.50 | 97.97±0.50 | 97.97±0.50 | 97.98±0.49 |
| Random 1 | 96.30±0.68 | 96.29±0.69 | 96.29±0.66 | 96.36±0.73 |
| Random 2 | 97.09±0.39 | 97.08±0.39 | 97.07±0.38 | 97.13±0.39 |
| Random 3 | 96.95±0.64 | 96.94±0.64 | 96.92±0.63 | 96.99±0.65 |
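The orderings compared above amount to permuting the electrode rows of the EEG matrix before the 2D-DWT is applied. A minimal sketch of that permutation follows, using the horizontal and vertical orders from Table 1. The order in which the amplifier actually stored the electrodes is not given in the source, so the alphabetical `recorded` order, the placeholder sample values, and the helper name `reorder_rows` are all hypothetical.

```python
# Electrode orders copied from Table 1.
HORIZONTAL = [
    "Fp1", "Fp2", "F11", "F7", "F3", "Fz", "F4", "F8", "F12", "FT11",
    "FC3", "FCz", "FC4", "FT12", "T7", "C3", "Cz", "C4", "T8", "CP3",
    "CPz", "CP4", "P7", "P3", "Pz", "P4", "P8", "O1", "Oz", "O2",
]
VERTICAL = [
    "F11", "FT11", "Fp1", "F7", "T7", "P7", "F3", "FC3", "C3", "CP3",
    "P3", "O1", "Fz", "FCz", "Cz", "CPz", "Pz", "Oz", "F4", "FC4",
    "C4", "CP4", "P4", "O2", "Fp2", "F8", "T8", "P8", "F12", "FT12",
]

def reorder_rows(rows, recorded_order, target_order):
    """Permute per-electrode rows from recorded_order into target_order."""
    if sorted(recorded_order) != sorted(target_order):
        raise ValueError("both orders must contain the same electrodes")
    row_of = {name: i for i, name in enumerate(recorded_order)}
    return [rows[row_of[name]] for name in target_order]

# Hypothetical recording order (alphabetical); the real one is not stated.
recorded = sorted(HORIZONTAL)
# Placeholder samples: row i is filled with the value i so rows are traceable.
rows = [[float(i)] * 4 for i in range(len(recorded))]

reordered = reorder_rows(rows, recorded, VERTICAL)
print(VERTICAL[0], reordered[0])
```

A random ordering such as those in Table 1 is handled the same way by passing that list as `target_order`.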

[1] LIU Ran, XU Miao, ZHANG Yanzhen, et al. A pilot study on electroencephalogram-based evaluation of visually induced motion sickness[J]. Journal of Imaging Science and Technology, 2020, 64(2): 020501. doi: 10.2352/J.ImagingSci.Technol.2020.64.2.020501.

[2] LIANG Tie, HONG Lei, XIAO Jinzhuang, et al. Directed network analysis reveals changes in cortical and muscular connectivity caused by different standing balance tasks[J]. Journal of Neural Engineering, 2022, 19(4): 046021. doi: 10.1088/1741-2552/ac7d0c.

[3] KENNEDY R S, DREXLER J, and KENNEDY R C. Research in visually induced motion sickness[J]. Applied Ergonomics, 2010, 41(4): 494–503. doi: 10.1016/j.apergo.2009.11.006.

[4] 顾展滔, 丁玎, 陈亦婷, 等. 面向虚拟现实晕动症的评估与缓解方法[J]. 浙江大学学报: 理学版, 2025, 52(1): 30–37. doi: 10.3785/j.issn.1008-9497.2025.01.004.
GU Zhantao, DING Ding, CHEN Yiting, et al. Evaluation and mitigation methods for virtual reality motion sickness[J]. Journal of Zhejiang University: Science Edition, 2025, 52(1): 30–37. doi: 10.3785/j.issn.1008-9497.2025.01.004.

[5] GOLDING J F. Motion sickness susceptibility[J]. Autonomic Neuroscience, 2006, 129(1/2): 67–76. doi: 10.1016/j.autneu.2006.07.019.

[6] 蔡力, 翁冬冬, 张振亮, 等. 虚实运动一致性对虚拟现实晕动症的影响[J]. 系统仿真学报, 2016, 28(9): 1950–1956. doi: 10.16182/j.cnki.joss.2016.09.004.
CAI Li, WENG Dongdong, ZHANG Zhenliang, et al. Impact of consistency between visually perceived movement and real movement on cybersickness[J]. Journal of System Simulation, 2016, 28(9): 1950–1956. doi: 10.16182/j.cnki.joss.2016.09.004.

[7] MITTELSTAEDT J M. Individual predictors of the susceptibility for motion-related sickness: A systematic review[J]. Journal of Vestibular Research, 2020, 30(3): 165–193. doi: 10.3233/ves-200702.

[8] 徐子超, 张玲, 肖水凤, 等. 晕动症机制及防治技术研究进展与展望[J]. 海军军医大学学报, 2024, 45(8): 923–928. doi: 10.16781/j.CN31-2187/R.20240056.
XU Zichao, ZHANG Ling, XIAO Shuifeng, et al. Motion sickness mechanism and control techniques: Research progress and prospect[J]. Academic Journal of Naval Medical University, 2024, 45(8): 923–928. doi: 10.16781/j.CN31-2187/R.20240056.

[9] 蔡永青, 韩成, 权巍, 等. 基于注意力机制的视觉诱导晕动症评估模型[J]. 浙江大学学报: 工学版, 2025, 59(6): 1110–1118. doi: 10.3785/j.issn.1008-973X.2025.06.002.
CAI Yongqing, HAN Cheng, QUAN Wei, et al. Visual induced motion sickness estimation model based on attention mechanism[J]. Journal of Zhejiang University: Engineering Science, 2025, 59(6): 1110–1118. doi: 10.3785/j.issn.1008-973X.2025.06.002.

[10] FENG Naishi, ZHOU Bin, ZHANG Qianqian, et al. A comprehensive exploration of motion sickness process analysis from EEG signal and virtual reality[J]. Computer Methods and Programs in Biomedicine, 2025, 264: 108714. doi: 10.1016/j.cmpb.2025.108714.

[11] CAI Mengpu, CHEN Junxiang, HUA Chengcheng, et al. EEG emotion recognition using EEG-SWTNS neural network through EEG spectral image[J]. Information Sciences, 2024, 680: 121198. doi: 10.1016/j.ins.2024.121198.

[12] CHAUDARY E, KHAN S A, and MUMTAZ W. EEG-CNN-souping: Interpretable emotion recognition from EEG signals using EEG-CNN-souping model and explainable AI[J]. Computers and Electrical Engineering, 2025, 123: 110189. doi: 10.1016/j.compeleceng.2025.110189.

[13] MEISER A, LENA KNOLL A, and BLEICHNER M G. High-density ear-EEG for understanding ear-centered EEG[J]. Journal of Neural Engineering, 2024, 21(1): 016001. doi: 10.1088/1741-2552/ad1783.

[14] LIAO C Y, TAI S K, CHEN R C, et al. Using EEG and deep learning to predict motion sickness under wearing a virtual reality device[J]. IEEE Access, 2020, 8: 126784–126796. doi: 10.1109/access.2020.3008165.

[15] 韩敏, 孙磊磊, 洪晓军. 基于回声状态网络的脑电信号特征提取[J]. 生物医学工程学杂志, 2012, 29(2): 206–211.
HAN Min, SUN Leilei, and HONG Xiaojun. Extraction of the EEG signal feature based on echo state networks[J]. Journal of Biomedical Engineering, 2012, 29(2): 206–211.

[16] 张成, 汤璇, 杨冬平, 等. 基于深度学习的脑电信号特征检测方法[J]. 电子设计工程, 2024, 32(15): 156–160. doi: 10.14022/j.issn1674-6236.2024.15.033.
ZHANG Cheng, TANG Xuan, YANG Dongping, et al. Method for EEG signal feature detection based on deep learning[J]. Electronic Design Engineering, 2024, 32(15): 156–160. doi: 10.14022/j.issn1674-6236.2024.15.033.

[17] DAS A, SINGH S, KIM J, et al. Enhanced EEG signal classification in brain computer interfaces using hybrid deep learning models[J]. Scientific Reports, 2025, 15(1): 27161. doi: 10.1038/s41598-025-07427-2.

[18] RAPARTHI M, MITTA N R, DUNKA V K, et al. Deep learning model for patient emotion recognition using EEG-tNIRS data[J]. Neuroscience Informatics, 2025, 5(3): 100219. doi: 10.1016/j.neuri.2025.100219.

[19] GUO Xiang, LIANG Ruiqi, XU Shule, et al. An investigation of echo state network for EEG-based emotion recognition with deep neural networks[J]. Biomedical Signal Processing and Control, 2026, 111: 108342. doi: 10.1016/j.bspc.2025.108342.

[20] CHEN He, SONG Yan, and LI Xiaoli. A deep learning framework for identifying children with ADHD using an EEG-based brain network[J]. Neurocomputing, 2019, 356: 83–96. doi: 10.1016/j.neucom.2019.04.058.

[21] WANG Teng, HUANG Xiaoqiao, XIAO Zenan, et al. EEG emotion recognition based on differential entropy feature matrix through 2D-CNN-LSTM network[J]. EURASIP Journal on Advances in Signal Processing, 2024, 2024(1): 49. doi: 10.1186/s13634-024-01146-y.

[22] 王春丽, 李金絮, 高玉鑫, 等. 一种基于时空频多维特征的短时窗口脑电听觉注意解码网络[J]. 电子与信息学报, 2025, 47(3): 814–824. doi: 10.11999/JEIT240867.
WANG Chunli, LI Jinxu, GAO Yuxin, et al. A short-time window electroencephalogram auditory attention decoding network based on multi-dimensional characteristics of temporal-spatial-frequency[J]. Journal of Electronics & Information Technology, 2025, 47(3): 814–824. doi: 10.11999/JEIT240867.

[23] DHONGADE D, CAPTAIN K, and DAHIYA S. EEG-based schizophrenia detection: Integrating discrete wavelet transform and deep learning[J]. Cognitive Neurodynamics, 2025, 19(1): 62. doi: 10.1007/s11571-025-10248-8.

[24] MINHAS R, PEKER N Y, HAKKOZ M A, et al. Improved drowsiness detection in drivers through optimum pairing of EEG features using an optimal EEG channel comparable to a multichannel EEG system[J]. Medical & Biological Engineering & Computing, 2025, 63(10): 3019–3036. doi: 10.1007/s11517-025-03375-1.

[25] SHEN Mingkan, WEN Peng, SONG Bo, et al. An EEG based real-time epilepsy seizure detection approach using discrete wavelet transform and machine learning methods[J]. Biomedical Signal Processing and Control, 2022, 77: 103820. doi: 10.1016/j.bspc.2022.103820.

[26] WEN Dong, JIAO Wenlong, LI Xiaoling, et al. The EEG signals steganography based on wavelet packet transform-singular value decomposition-logistic[J]. Information Sciences, 2024, 679: 121006. doi: 10.1016/j.ins.2024.121006.

[27] QIN Yuxin, LI Baojiang, WANG Wenlong, et al. ETCNet: An EEG-based motor imagery classification model combining efficient channel attention and temporal convolutional network[J]. Brain Research, 2024, 1823: 148673. doi: 10.1016/j.brainres.2023.148673.

[28] ZHOU Kai, HAIMUDULA A, and TANG Wanying. Dual-branch convolution network with efficient channel attention for EEG-based motor imagery classification[J]. IEEE Access, 2024, 12: 74930–74943. doi: 10.1109/access.2024.3404634.

[29] KIEU H D. Graph attention network for motor imagery classification[C]. 2024 RIVF International Conference on Computing and Communication Technologies (RIVF), Danang, Vietnam, 2024: 255–260. doi: 10.1109/RIVF64335.2024.11009062.

[30] LENG Jiancai, GAO Licai, JIANG Xiuquan, et al. A multi-feature fusion graph attention network for decoding motor imagery intention in spinal cord injury patients[J]. Journal of Neural Engineering, 2024, 21(6): 066044. doi: 10.1088/1741-2552/ad9403.

[31] 化成城, 周占峰, 陶建龙, 等. 导联注意力及脑连接驱动的虚拟现实晕动症识别模型研究[J]. 电子与信息学报, 2025, 47(4): 1161–1171. doi: 10.11999/JEIT240440.
HUA Chengcheng, ZHOU Zhanfeng, TAO Jianlong, et al. Virtual reality motion sickness recognition model driven by lead-attention and brain connection[J]. Journal of Electronics & Information Technology, 2025, 47(4): 1161–1171. doi: 10.11999/JEIT240440.

[32] ALI O, SAIF-UR-REHMAN M, GLASMACHERS T, et al. ConTraNet: A hybrid network for improving the classification of EEG and EMG signals with limited training data[J]. Computers in Biology and Medicine, 2024, 168: 107649. doi: 10.1016/j.compbiomed.2023.107649.

[33] SONG Yonghao, ZHENG Qingqing, LIU Bingchuan, et al. EEG conformer: Convolutional transformer for EEG decoding and visualization[J]. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2023, 31: 710–719. doi: 10.1109/tnsre.2022.3230250.

[34] MANE R, CHEW E, CHUA K, et al. FBCNet: A multi-view convolutional neural network for brain-computer interface[J]. arXiv preprint arXiv:2104.01233, 2021. doi: 10.48550/arXiv.2104.01233.

[35] PAN Junting, SAYROL E, GIRO-I-NIETO X, et al. Shallow and deep convolutional networks for saliency prediction[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 598–606. doi: 10.1109/CVPR.2016.71.

[36] 林艳飞, 臧博宇, 郭嵘骁, 等. 基于相频特性的稳态视觉诱发电位深度学习分类模型[J]. 电子与信息学报, 2022, 44(2): 446–454. doi: 10.11999/JEIT210816.
LIN Yanfei, ZANG Boyu, GUO Rongxiao, et al. A deep learning method for SSVEP classification based on phase and frequency characteristics[J]. Journal of Electronics & Information Technology, 2022, 44(2): 446–454. doi: 10.11999/JEIT210816.

[37] 熊鹏, 刘学朋, 杜海曼, 等. 基于平稳和连续小波变换融合算法的心电信号P, T波检测[J]. 电子与信息学报, 2021, 43(5): 1441–1447. doi: 10.11999/JEIT200049.
XIONG Peng, LIU Xuepeng, DU Haiman, et al. Detection of ECG signal P and T wave based on stationary and continuous wavelet transform fusion[J]. Journal of Electronics & Information Technology, 2021, 43(5): 1441–1447. doi: 10.11999/JEIT200049.
