Continuous Federation of Noise-resistant Heterogeneous Medical Dialogue Using the Trustworthiness-based Evaluation


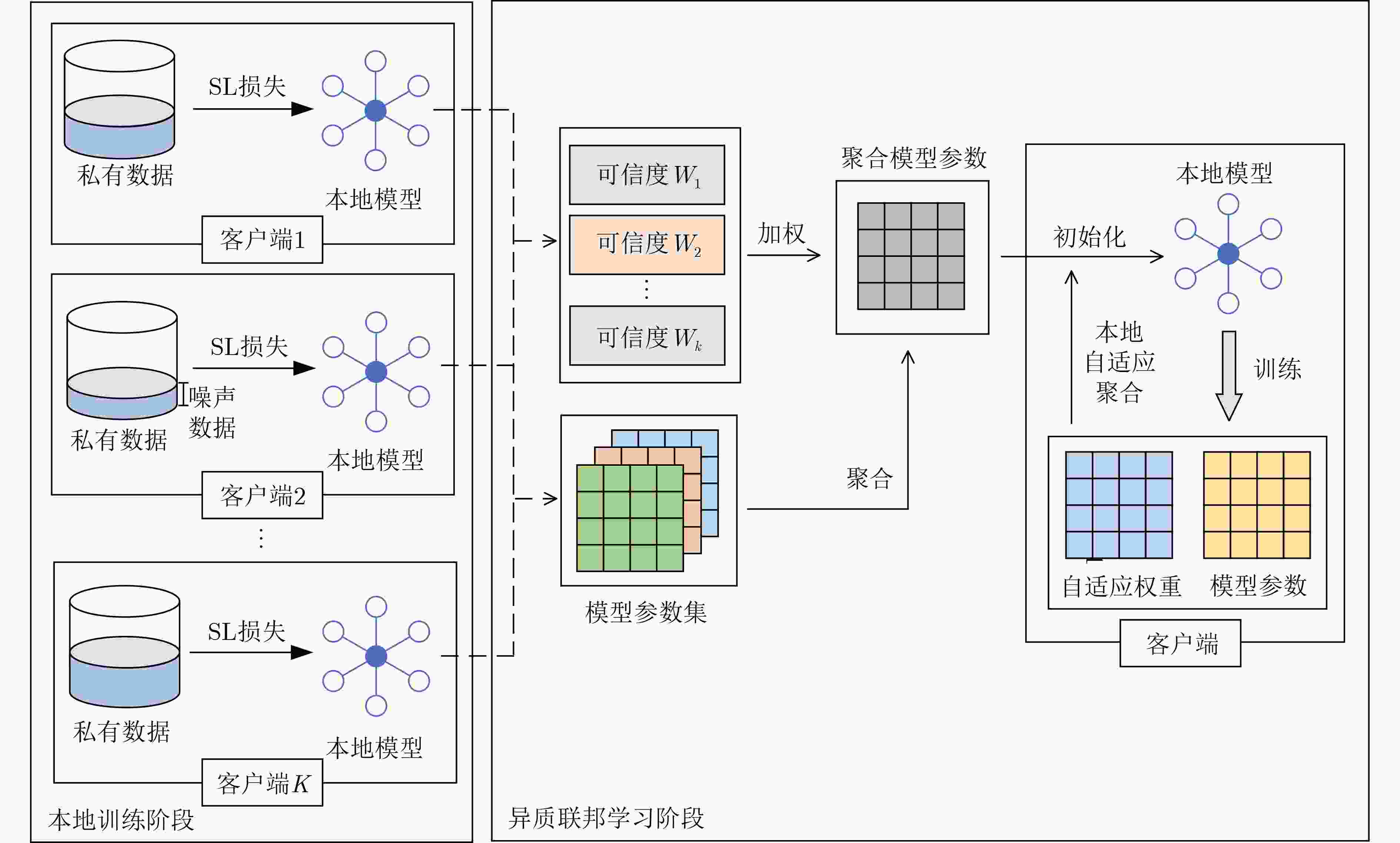

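Abstract: To handle heterogeneous and noisy text, this paper jointly improves the objective function, the aggregation scheme, and the local update scheme. It proposes a trustworthiness-based, noise-resistant heterogeneous medical dialogue federation that strengthens the robustness of federated learning for medical dialogue. Model training is divided into a local training stage and a heterogeneous federated learning stage. In the local training stage, a symmetric cross-entropy loss mitigates text noise and prevents local models from overfitting to noisy text. In the heterogeneous federated learning stage, the model is aggregated adaptively according to the measured quality of each client's text. This lets the method account for clean text, noisy text (random or non-random noise affecting syntax and semantics), and heterogeneous text. In addition, local parameter updates draw on both local and global parameters, so that parameters are updated continuously and adaptively, which further improves robustness to noise and heterogeneity. Experimental results show that the method significantly outperforms other methods in noisy and heterogeneous federated learning scenarios.

Abstract: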

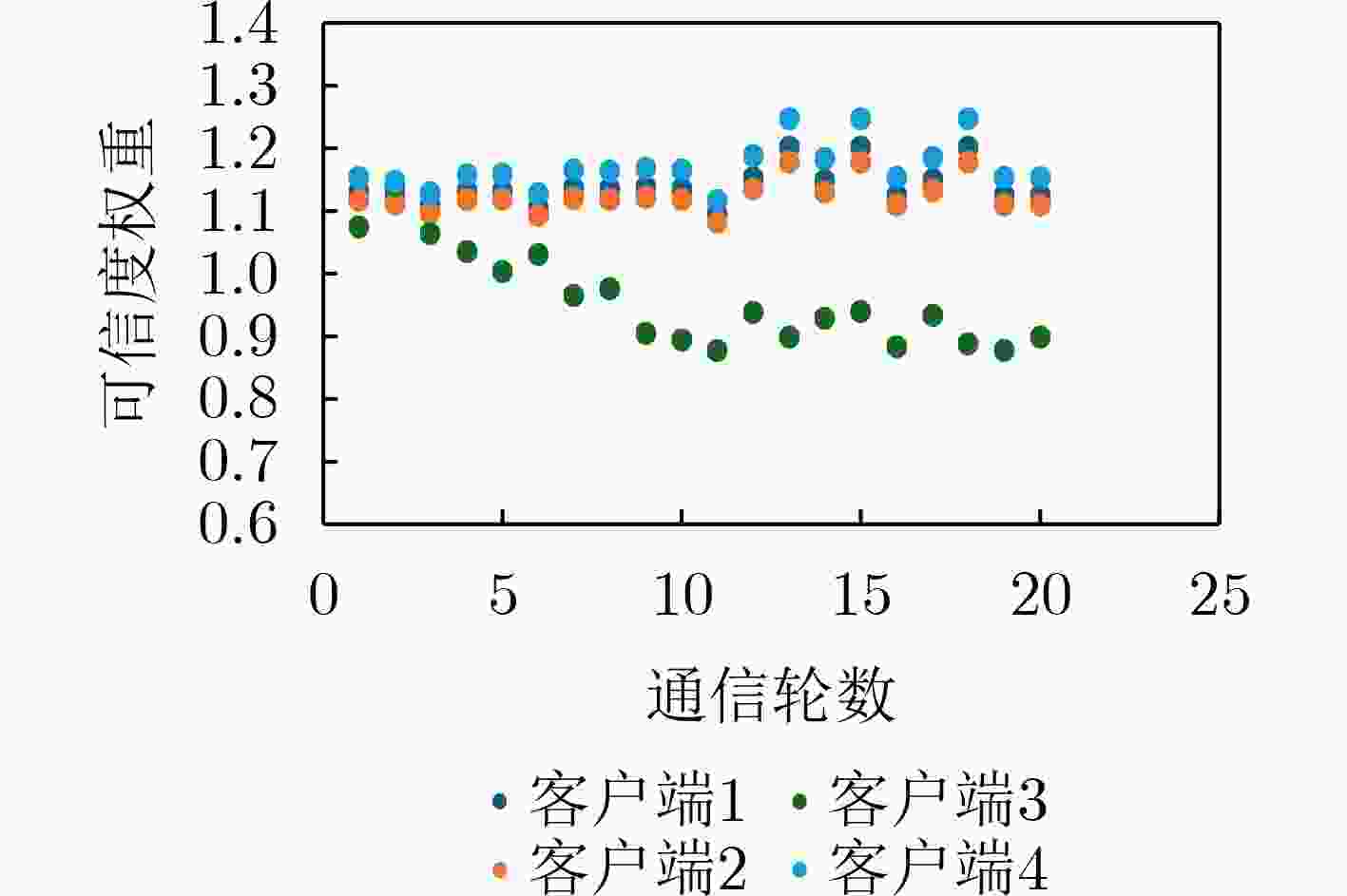

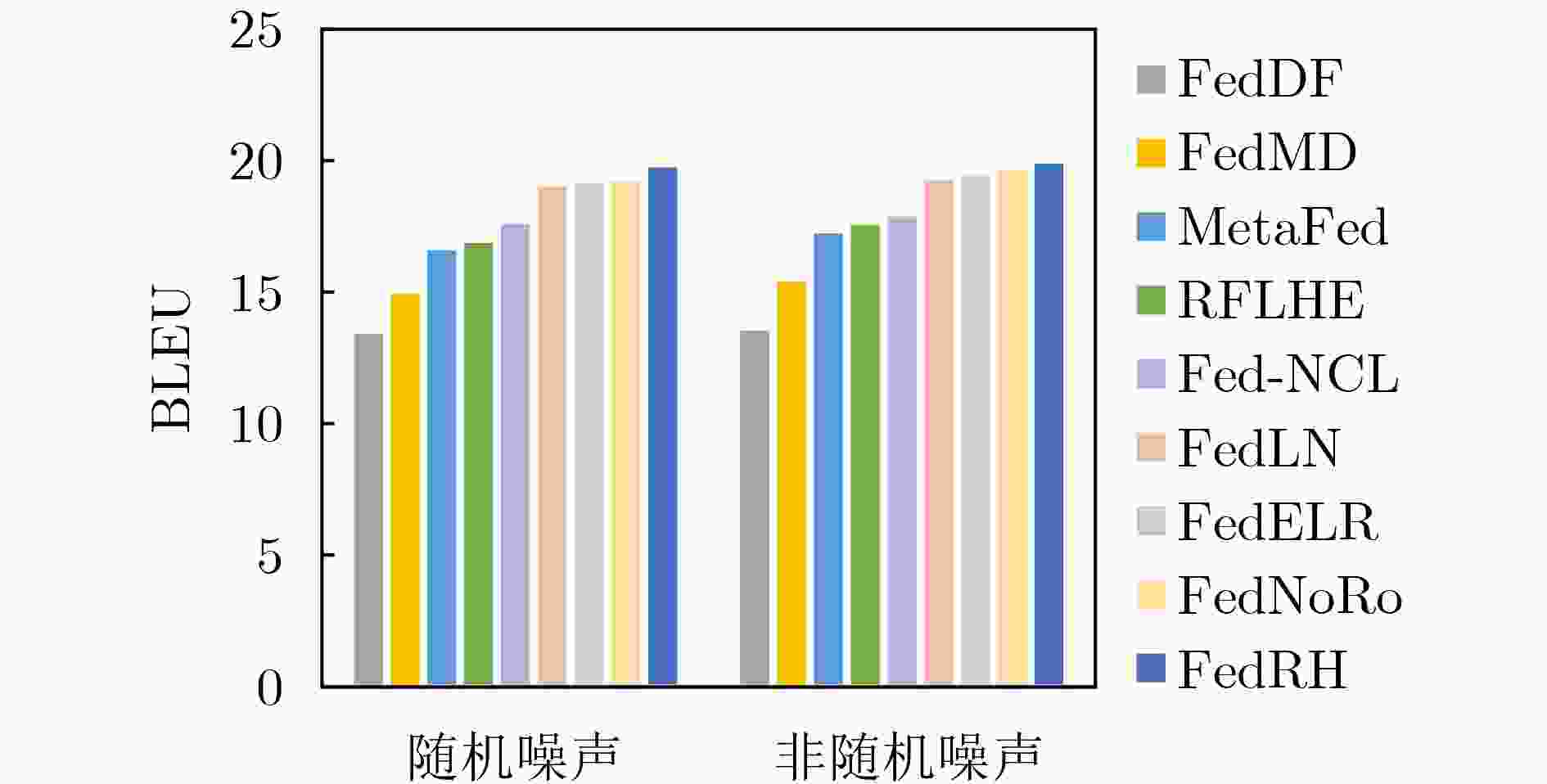

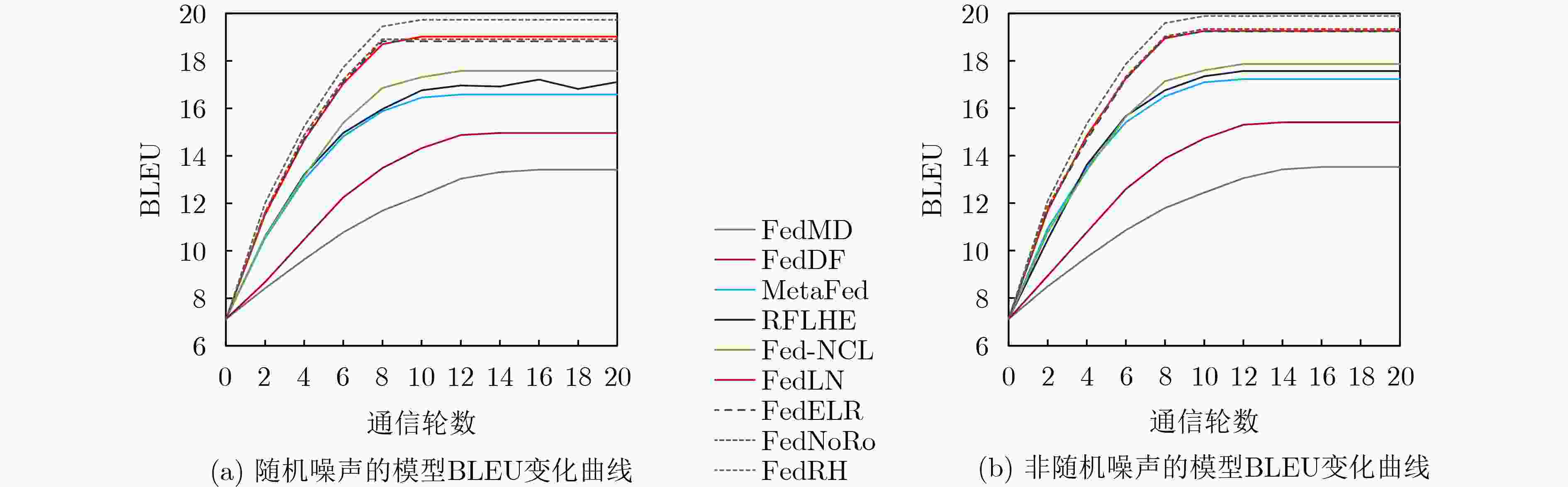

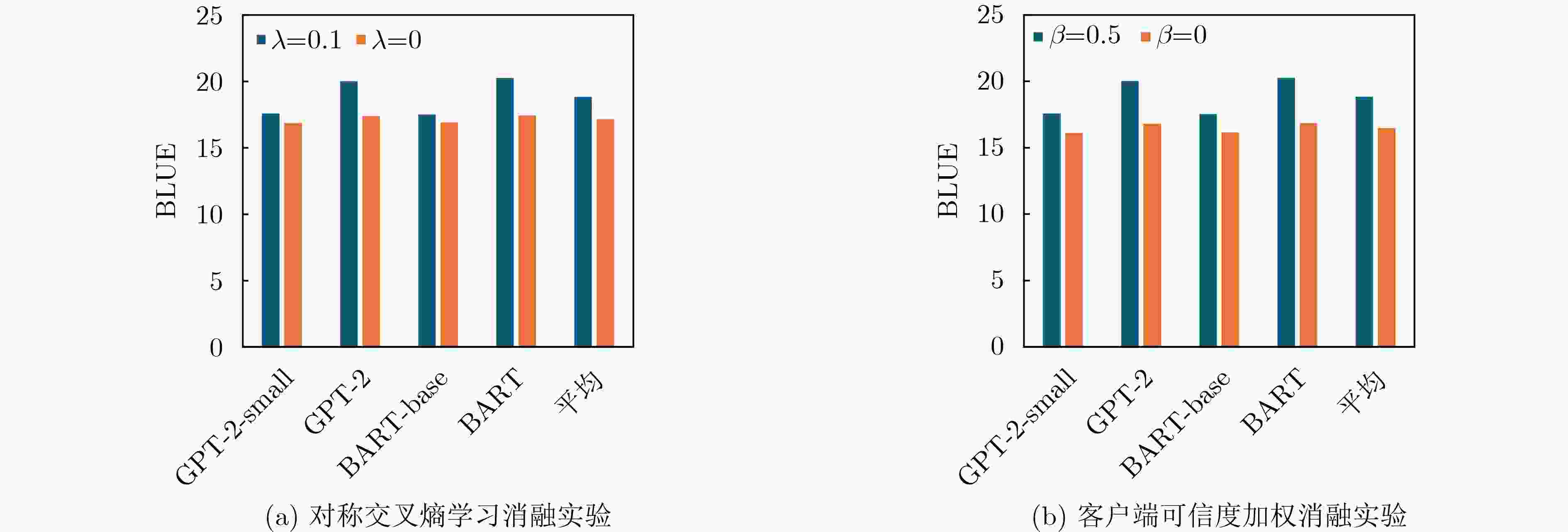

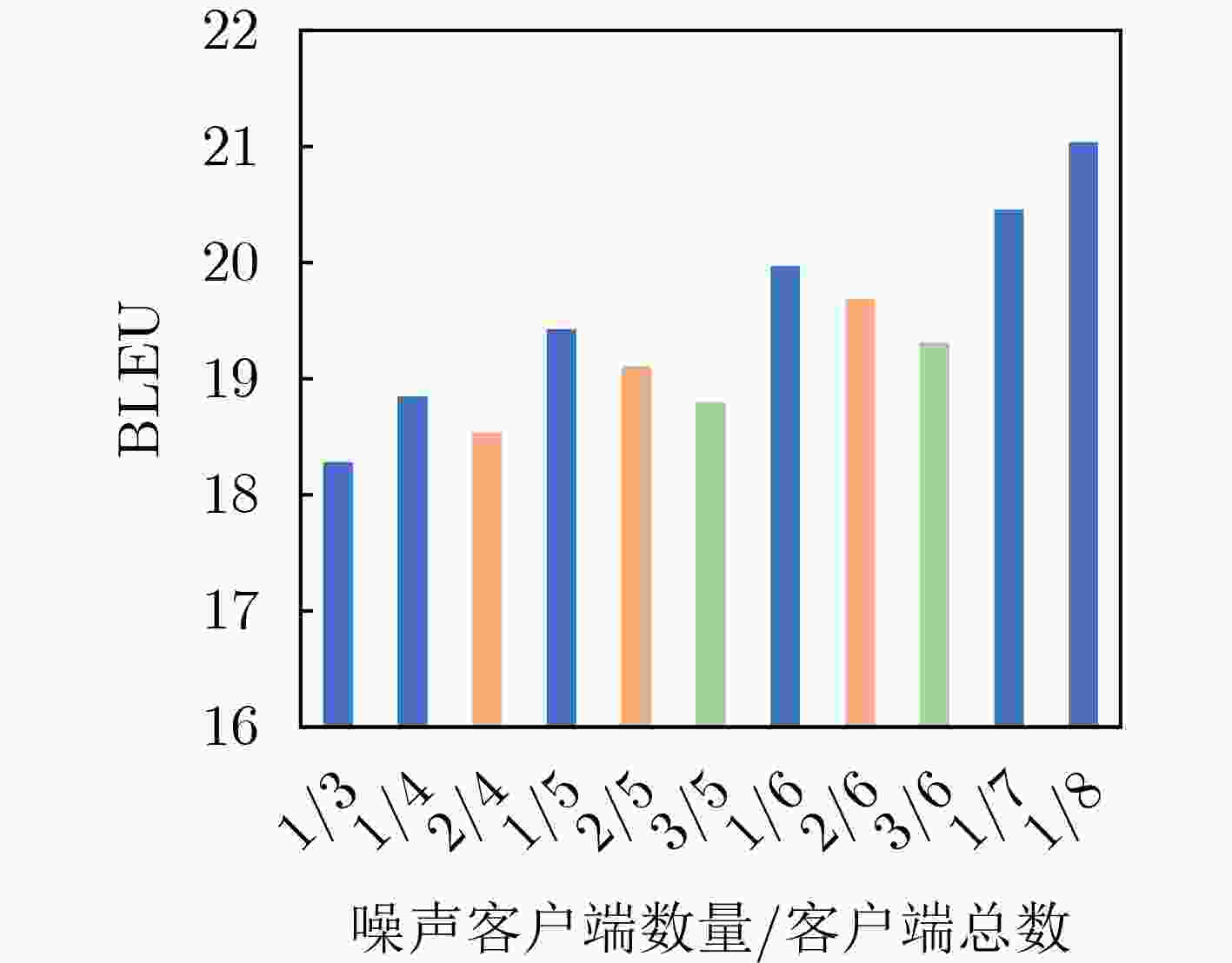

Objective To address the key challenges of client model heterogeneity, data distribution heterogeneity, and text noise in medical dialogue federated learning, this paper proposes a trustworthiness-based, noise-resistant heterogeneous medical dialogue federated learning method, termed FedRH. Guided by trustworthiness evaluation, FedRH enhances robustness by improving the objective function, the aggregation strategy, and the local update process.

Methods Model training is divided into a local training stage and a heterogeneous federated learning stage. During local training, text noise is mitigated with a symmetric cross-entropy loss, which reduces the risk of overfitting to noisy text. In the heterogeneous federated learning stage, an adaptive aggregation mechanism evaluates the text quality of each client, so that clean, noisy, and heterogeneous client texts are all taken into account. Local parameter updates consider local and global parameters simultaneously. These continuous adaptive updates improve robustness to random and non-random (syntactic and semantic) noise and to model heterogeneity. The main contributions are threefold: (1) a local noise-resistant training strategy that uses a symmetric cross-entropy loss to prevent overfitting to noisy text during local training; (2) a heterogeneous federated learning approach based on client trustworthiness, which evaluates each client's text quality and learning effectiveness to compute trust scores; these scores adaptively weight clients during model aggregation, reducing the influence of low-quality data while accounting for text heterogeneity; (3) a local continuous adaptive aggregation mechanism that lets the local model integrate fine-grained information from the global model, reducing the adverse effect on local updates of global model bias caused by heterogeneous and noisy text.
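The local training stage relies on a symmetric cross-entropy loss. It presumably follows the formulation of Wang et al. [25], $\mathcal{L}^{\mathrm{SL}} = \alpha\,\mathcal{L}_{\mathrm{CE}} + \beta\,\mathcal{L}_{\mathrm{RCE}}$, where the reverse term swaps the roles of prediction and label. With a one-hot label and $\log 0$ clamped to a constant $A$, the reverse term simplifies to $\mathcal{L}_{\mathrm{RCE}} = -A\,(1 - q(y\mid x))$.

The sketch below is a minimal, framework-free illustration under several assumptions:
- the loss is applied per target token of a generated response;
- $\alpha = 0.1$ and $\beta = 1.0$ are placeholder weights, not the paper's settings;
- $A = -4$, as used in [25].

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one vocabulary distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def symmetric_cross_entropy(logits: Sequence[Sequence[float]], labels: Sequence[int],
                            alpha: float = 0.1, beta: float = 1.0,
                            log_zero: float = -4.0) -> float:
    """Mean symmetric cross-entropy alpha * CE + beta * RCE over target tokens.

    logits: one score vector per target token; labels: reference token ids,
    which may be noisy. alpha and beta are assumed placeholder weights.
    """
    total = 0.0
    for scores, y in zip(logits, labels):
        q = softmax(scores)
        # Standard cross-entropy: -log q(y|x), guarded against log(0).
        ce = -math.log(max(q[y], 1e-12))
        # Reverse cross-entropy -sum_k q(k|x) log p(k|x) with one-hot p:
        # log p(y) = 0 and log p(k != y) is clamped to log_zero,
        # so RCE = -log_zero * (1 - q(y|x)).
        rce = -log_zero * (1.0 - q[y])
        total += alpha * ce + beta * rce
    return total / len(labels)


# A uniform prediction over 4 tokens: CE = log 4, RCE = 4 * 0.75 = 3.0.
loss = symmetric_cross_entropy([[0.0, 0.0, 0.0, 0.0]], [0])
```

Per token, the RCE term is bounded above by $-A$, whereas CE grows without bound as $q(y\mid x) \to 0$. This gives one intuition for why adding RCE makes training less sensitive to mislabeled tokens.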
Results and Discussions The effectiveness of the proposed model is validated through extensive experiments from multiple perspectives. FedRH achieves substantial improvements over existing methods in noisy and heterogeneous federated learning scenarios (Table 2, Table 3). The study also presents training curves for heterogeneous models (Fig. 3) and homogeneous models (Fig. 6). These are supplemented by parameter sensitivity analysis, ablation experiments, and a case study.

Conclusions The proposed FedRH framework significantly enhances the robustness of federated learning for medical dialogue tasks in the presence of heterogeneous and noisy text. The main conclusions are as follows: (1) Compared with baseline methods, FedRH client models perform better under heterogeneous and noisy text conditions. They improve on multiple metrics, including precision, recall, and factual consistency, and converge faster during training. (2) Ablation experiments confirm that both the symmetric cross-entropy-based local training strategy and the trustworthiness-weighted heterogeneous aggregation approach contribute to the performance gains.

Algorithm 1 Federated training algorithm

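Input: datasets of the $K$ clients $\bar D_1, \bar D_2, \cdots, \bar D_K$; adaptive weights $W_k$; number of local training rounds $T_{\mathrm{l}}$; number of federated rounds $T_{\mathrm{c}}$

Output: local models $\varTheta_k$

(1) On each client, train the local model $\varTheta_k$ on dataset $\bar D_k$ with the symmetric cross-entropy loss $\mathcal{L}^{\mathrm{SL}}$ for $T_{\mathrm{l}}$ rounds
(2) For each client $k$ (in parallel):
(3) Compute the trustworthiness $C_k$
(4) Send the local model $\varTheta_k$ and the trustworthiness $C_k$ to the server
(5) Wait for the server to generate the global model $\varTheta_0$ from $C_k$ and $\varTheta_k$ of all clients, $\forall k \in [1,K]$
(6) $\varTheta_k \leftarrow$ local adaptive aggregation($\varTheta_0$, $\varTheta_k$, $W_k$)
(7) Update the local model $\varTheta_k$ and the adaptive weight $W_k$
(8) Return $\varTheta_k$ after $T_{\mathrm{c}}$ rounds of training

Table 1 Client model architecture parameters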
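Steps (5) to (7) of the federated training algorithm can be illustrated with a minimal sketch under explicit assumptions.

The server step normalizes the trustworthiness scores $C_k$ into weights and takes a weighted average. Because FedRH clients are heterogeneous, this assumes the averaged vectors share a common shape, for example outputs on shared data or a shared sub-module. The text here does not specify which.

The local step uses a FedALA-style element-wise rule [27], $\varTheta_k \leftarrow \varTheta_k + (\varTheta_0 - \varTheta_k) \odot W_k$, with $W_k$ updated by gradient descent and clipped to $[0,1]$. Whether FedRH uses exactly this rule is an assumption, and all function names below are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def trust_weights(trust_scores: Sequence[float]) -> Vector:
    """Normalize non-negative trustworthiness scores C_k into weights that sum to 1."""
    total = sum(trust_scores)
    if total <= 0:
        # Hypothetical fallback: equal weights if every score is zero.
        return [1.0 / len(trust_scores)] * len(trust_scores)
    return [c / total for c in trust_scores]


def server_aggregate(client_vectors: Sequence[Vector],
                     trust_scores: Sequence[float]) -> Vector:
    """Step (5), sketched: trust-weighted average of equally shaped client vectors."""
    weights = trust_weights(trust_scores)
    dim = len(client_vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, client_vectors)) for i in range(dim)]


def local_adaptive_aggregate(theta_global: Vector, theta_local: Vector,
                             w: Vector) -> Vector:
    """Step (6), FedALA-style: theta_k + (theta_0 - theta_k) * W_k, element-wise."""
    return [tl + (tg - tl) * wi for tg, tl, wi in zip(theta_global, theta_local, w)]


def update_adaptive_weights(w: Vector, grad: Vector, theta_global: Vector,
                            theta_local: Vector, lr: float = 0.1) -> Vector:
    """Step (7), FedALA-style gradient step on W_k, clipped to [0, 1].

    `grad` is dL/dtheta evaluated at the aggregated parameters; by the chain rule
    dL/dW = grad * (theta_0 - theta_k) element-wise, with theta_k taken before step (6).
    """
    return [min(1.0, max(0.0, wi - lr * g * (tg - tl)))
            for wi, g, tg, tl in zip(w, grad, theta_global, theta_local)]


# Toy usage: client 1 is three times as trustworthy as client 2.
theta_0 = server_aggregate([[1.0, 2.0], [3.0, 4.0]], [3.0, 1.0])  # [1.5, 2.5]
theta_k_new = local_adaptive_aggregate(theta_0, [1.0, 2.0], [0.5, 0.0])  # [1.25, 2.0]
```

Setting an entry of $W_k$ to 0 keeps the local parameter, and setting it to 1 adopts the global one. This is how an element-wise weight can let each client decide, per parameter, how much global information to absorb.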

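Table 2 Performance comparison under random noise

| Method | BLEU B-1 | BLEU B-4 | ROUGE R-1 | ROUGE R-2 | Factual consistency: Inconsistency | Factual consistency: Hallucination |
|---|---|---|---|---|---|---|
| FedMD | 19.13 | 9.91 | 37.33 | 22.80 | 21.56 | 40.62 |
| FedDF | 18.60 | 9.63 | 37.16 | 22.69 | 21.96 | 41.16 |
| MetaFed | 20.88 | 11.10 | 41.30 | 24.90 | 17.89 | 38.93 |
| RFLHE | 21.76 | 10.88 | 41.68 | 26.03 | 17.75 | 39.17 |
| Fed-NCL | 22.18 | 11.61 | 42.85 | 26.24 | 16.95 | 37.59 |
| FedLN | 23.38 | 13.02 | 44.16 | 27.69 | 14.53 | 36.41 |
| FedELR | 23.61 | 13.02 | 44.24 | 27.23 | 14.43 | 36.24 |
| FedNoRo | 23.38 | 12.88 | 44.09 | 27.01 | 14.77 | 36.65 |
| FedRH | 24.11 | 13.59 | 45.09 | 28.75 | 14.30 | 35.76 |

Table 3 Performance comparison under non-random noise

| Method | BLEU B-1 | BLEU B-4 | ROUGE R-1 | ROUGE R-2 | Factual consistency: Inconsistency | Factual consistency: Hallucination |
|---|---|---|---|---|---|---|
| FedMD | 19.71 | 10.11 | 38.89 | 24.00 | 23.72 | 44.28 |
| FedDF | 19.13 | 9.83 | 38.08 | 23.46 | 24.15 | 44.86 |
| MetaFed | 21.61 | 11.53 | 42.4 | 25.47 | 19.67 | 42.43 |
| RFLHE | 21.85 | 11.33 | 42.9 | 26.56 | 19.01 | 41.56 |
| Fed-NCL | 22.40 | 11.89 | 43.52 | 26.78 | 18.15 | 39.88 |
| FedLN | 23.77 | 13.07 | 44.86 | 28.25 | 14.92 | 38.63 |
| FedELR | 23.12 | 13.42 | 44.32 | 27.64 | 14.98 | 38.40 |
| FedNoRo | 23.46 | 12.93 | 44.66 | 27.87 | 14.98 | 38.82 |
| FedRH | 24.32 | 13.64 | 46.01 | 29.34 | 14.36 | 37.94 |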
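To make the margins in Tables 2 and 3 concrete, the short script below compares FedRH with the strongest baseline on each metric. It assumes that higher is better for BLEU and ROUGE. It also assumes lower is better for the Inconsistency and Hallucination columns, which is consistent with FedRH having the lowest values there and with the claimed gain in factual consistency. The values are copied from the tables.

```python
from typing import Dict, List, Tuple

METRICS = ["B-1", "B-4", "R-1", "R-2", "Inconsistency", "Hallucination"]
# Assumption: BLEU/ROUGE are higher-is-better; the two factual-consistency
# columns are error rates, so lower is better.
HIGHER_IS_BETTER = [True, True, True, True, False, False]

TABLE_2: Dict[str, List[float]] = {  # random noise
    "FedMD": [19.13, 9.91, 37.33, 22.80, 21.56, 40.62],
    "FedDF": [18.60, 9.63, 37.16, 22.69, 21.96, 41.16],
    "MetaFed": [20.88, 11.10, 41.30, 24.90, 17.89, 38.93],
    "RFLHE": [21.76, 10.88, 41.68, 26.03, 17.75, 39.17],
    "Fed-NCL": [22.18, 11.61, 42.85, 26.24, 16.95, 37.59],
    "FedLN": [23.38, 13.02, 44.16, 27.69, 14.53, 36.41],
    "FedELR": [23.61, 13.02, 44.24, 27.23, 14.43, 36.24],
    "FedNoRo": [23.38, 12.88, 44.09, 27.01, 14.77, 36.65],
    "FedRH": [24.11, 13.59, 45.09, 28.75, 14.30, 35.76],
}
TABLE_3: Dict[str, List[float]] = {  # non-random noise
    "FedMD": [19.71, 10.11, 38.89, 24.00, 23.72, 44.28],
    "FedDF": [19.13, 9.83, 38.08, 23.46, 24.15, 44.86],
    "MetaFed": [21.61, 11.53, 42.4, 25.47, 19.67, 42.43],
    "RFLHE": [21.85, 11.33, 42.9, 26.56, 19.01, 41.56],
    "Fed-NCL": [22.40, 11.89, 43.52, 26.78, 18.15, 39.88],
    "FedLN": [23.77, 13.07, 44.86, 28.25, 14.92, 38.63],
    "FedELR": [23.12, 13.42, 44.32, 27.64, 14.98, 38.40],
    "FedNoRo": [23.46, 12.93, 44.66, 27.87, 14.98, 38.82],
    "FedRH": [24.32, 13.64, 46.01, 29.34, 14.36, 37.94],
}


def margins_over_best_baseline(table: Dict[str, List[float]],
                               ours: str = "FedRH") -> Dict[str, Tuple[str, float]]:
    """Per metric: (strongest baseline, margin); a positive margin means `ours` is better."""
    result = {}
    for j, metric in enumerate(METRICS):
        baselines = {name: row[j] for name, row in table.items() if name != ours}
        pick = max if HIGHER_IS_BETTER[j] else min
        best = pick(baselines, key=baselines.get)
        diff = table[ours][j] - baselines[best]
        result[metric] = (best, round(diff if HIGHER_IS_BETTER[j] else -diff, 2))
    return result


for title, table in (("Table 2", TABLE_2), ("Table 3", TABLE_3)):
    for metric, (best, margin) in margins_over_best_baseline(table).items():
        print(f"{title} {metric}: {margin:+.2f} vs {best}")
```

On these values, FedRH's margin over the strongest baseline ranges from 0.13 points (Inconsistency, Table 2) to 1.15 points (R-1, Table 3).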

[1] MCMAHAN B, MOORE E, RAMAGE D, et al. Communication-efficient learning of deep networks from decentralized data[C]. The 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, USA, 2017: 1273–1282.
[2] LI Tian, SAHU A K, ZAHEER M, et al. Federated optimization in heterogeneous networks[C]. The Third Conference on Machine Learning and Systems, Austin, USA, 2020, 2: 429–450.
[3] TAM K, LI Li, HAN Bo, et al. Federated noisy client learning[J]. IEEE Transactions on Neural Networks and Learning Systems, 2025, 36(1): 1799–1812. doi: 10.1109/TNNLS.2023.3336050.
[4] XIE Mingkun and HUANG Shengjun. Partial multi-label learning with noisy label identification[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 44(7): 3676–3687. doi: 10.1109/TPAMI.2021.3059290.
[5] ROLNICK D, VEIT A, BELONGIE S, et al. Deep learning is robust to massive label noise[C]. 6th International Conference on Learning Representations, Vancouver, Canada, 2017.
[6] ANG Fan, CHEN Li, ZHAO Nan, et al. Robust federated learning with noisy communication[J]. IEEE Transactions on Communications, 2020, 68(6): 3452–3464. doi: 10.1109/TCOMM.2020.2979149.
[7] WANG Zhuowei, ZHOU Tianyi, LONG Guodong, et al. FedNoiL: A simple two-level sampling method for federated learning with noisy labels[J]. arXiv preprint arXiv: 2205.10110, 2022.
[8] ZHAO Xinyan, CHEN Liangwei, and CHEN Huanhuan. A weighted heterogeneous graph-based dialog system[J]. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(8): 5212–5217. doi: 10.1109/TNNLS.2021.3124640.
[9] SONG Wenfeng, HOU Xia, LI Shuai, et al. An intelligent virtual standard patient for medical students training based on oral knowledge graph[J]. IEEE Transactions on Multimedia, 2023, 25: 6132–6145. doi: 10.1109/TMM.2022.3205456.
[10] YAN Lian, GUAN Yi, WANG Haotian, et al. EIRAD: An evidence-based dialogue system with highly interpretable reasoning path for automatic diagnosis[J]. IEEE Journal of Biomedical and Health Informatics, 2024, 28(10): 6141–6154. doi: 10.1109/JBHI.2024.3426922.
[11] FALLAH A, MOKHTARI A, and OZDAGLAR A. Personalized federated learning with theoretical guarantees: A model-agnostic meta-learning approach[C]. The 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 300. doi: 10.5555/3495724.3496024.
[12] FINN C, ABBEEL P, and LEVINE S. Model-agnostic meta-learning for fast adaptation of deep networks[C]. The 34th International Conference on Machine Learning, Sydney, Australia, 2017: 1126–1135. doi: 10.5555/3305381.3305498.
[13] COLLINS L, HASSANI H, MOKHTARI A, et al. Exploiting shared representations for personalized federated learning[C]. The 38th International Conference on Machine Learning, 2021: 2089–2099.
[14] TAN Yan, LONG Guodong, LIU Lu, et al. FedProto: Federated prototype learning across heterogeneous clients[C]. The 36th AAAI Conference on Artificial Intelligence, 2022: 8432–8440. doi: 10.1609/aaai.v36i8.20819.
[15] LI Daliang and WANG Junpu. FedMD: Heterogenous federated learning via model distillation[J]. arXiv preprint arXiv: 1910.03581, 2019.
[16] LIN Tao, KONG Lingjing, STICH S U, et al. Ensemble distillation for robust model fusion in federated learning[C]. The 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 198. doi: 10.5555/3495724.3495922.
[17] WU Chuhan, WU Fangzhao, LYU Lingjuan, et al. Communication-efficient federated learning via knowledge distillation[J]. Nature Communications, 2022, 13(1): 2032. doi: 10.1038/s41467-022-29763-x.
[18] CHEN Yiqiang, LU Wang, QIN Xin, et al. MetaFed: Federated learning among federations with cyclic knowledge distillation for personalized healthcare[J]. IEEE Transactions on Neural Networks and Learning Systems, 2024, 35(11): 16671–16682. doi: 10.1109/TNNLS.2023.3297103.
[19] CHENG Sijie, WU Jingwen, XIAO Yanghua, et al. FedGEMS: Federated learning of larger server models via selective knowledge fusion[C]. The Tenth International Conference on Learning Representations, 2022: 117–135. doi: 10.18653/v1/2021.findings-naacl.10.
[20] GHOSH A, HONG J, YIN Dong, et al. Robust federated learning in a heterogeneous environment[J]. arXiv preprint arXiv: 1906.06629, 2019.
[21] TSOUVALAS V, SAEED A, OZCELEBI T, et al. Labeling chaos to learning harmony: Federated learning with noisy labels[J]. ACM Transactions on Intelligent Systems and Technology, 2024, 15(2): 22. doi: 10.1145/3626242.
[22] YANG Xin, YU Hao, GAO Xin, et al. Federated continual learning via knowledge fusion: A survey[J]. IEEE Transactions on Knowledge and Data Engineering, 2024, 36(8): 3832–3850. doi: 10.1109/TKDE.2024.3363240.
[23] YU Lang, GE Lina, WANG Guanghui, et al. PI-Fed: Continual federated learning with parameter-level importance aggregation[J]. IEEE Internet of Things Journal, 2024, 11(22): 37187–37199. doi: 10.1109/JIOT.2024.3440029.
[24] LI Dongdong, HUANG Nan, WANG Zhe, et al. Personalized federated continual learning for task-incremental biometrics[J]. IEEE Internet of Things Journal, 2023, 10(23): 20776–20788. doi: 10.1109/JIOT.2023.3284843.
[25] WANG Yisen, MA Xingjun, CHEN Zaiyi, et al. Symmetric cross entropy for robust learning with noisy labels[C]. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea (South), 2019: 322–330. doi: 10.1109/ICCV.2019.00041.
[26] FANG Xiuwen and YE Mang. Robust federated learning with noisy and heterogeneous clients[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022: 10062–10071. doi: 10.1109/CVPR52688.2022.00983.
[27] ZHANG Jianqing, HUA Yang, WANG Hao, et al. FedALA: Adaptive local aggregation for personalized federated learning[C]. The 37th AAAI Conference on Artificial Intelligence, Washington, USA, 2023: 11237–11244. doi: 10.1609/aaai.v37i9.26330.
[28] ZENG Guangtao, YANG Wenmian, JU Zeqian, et al. MedDialog: Large-scale medical dialogue datasets[C]. The 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2020: 9241–9250. doi: 10.18653/v1/2020.emnlp-main.743.
[29] WANG Xiao, LIU Qin, GUI Tao, et al. TextFlint: Unified multilingual robustness evaluation toolkit for natural language processing[C]. Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: System Demonstrations, 2021: 347–355. doi: 10.18653/v1/2021.acl-demo.41.
[30] RADFORD A, WU J, CHILD R, et al. Language models are unsupervised multitask learners[R]. OpenAI blog, 2019.
[31] LEWIS M, LIU Yinhan, GOYAL N, et al. BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension[C]. The 58th Annual Meeting of the Association for Computational Linguistics, 2020: 7871–7880. doi: 10.18653/v1/2020.acl-main.703.
[32] KINGMA D P and BA J. Adam: A method for stochastic optimization[C]. 3rd International Conference on Learning Representations, San Diego, USA, 2015.
[33] PU Ruizhi, YU Lixing, ZHAN Shaojie, et al. FedELR: When federated learning meets learning with noisy labels[J]. Neural Networks, 2025, 187: 107275. doi: 10.1016/j.neunet.2025.107275.
[34] WU Nannan, YU Li, JIANG Xuefeng, et al. FedNoRo: Towards noise-robust federated learning by addressing class imbalance and label noise heterogeneity[C]. The Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 2023: 492. doi: 10.24963/ijcai.2023/492.
