Depression Screening Method Driven by Global-Local Feature Fusion


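Summary: Current machine-vision methods for depression screening often ignore local facial features. In practice, partial occlusion of the face severely degrades screening accuracy and can make effective screening impossible. To address this, this paper proposes an edge-vision depression screening method that builds a global-local fusion attention network to recognize the subject's facial expression and local eye features simultaneously. To improve the extraction of local eye features, a convolutional attention module is introduced into the network to strengthen the capture of eye-movement trajectory features. Experimental results show that the method performs well in depression recognition. On the self-built dataset (which includes facial occlusion), precision, recall, and F1 score reach 0.76, 0.78, and 0.77, respectively, with recall 10.76% higher than the latest method. On the AVEC2013 and AVEC2014 datasets, the Mean Absolute Error (MAE) is as low as 5.74 and 5.79, improvements of 3.53% and 1.2% over the latest method. In addition, visualization analysis shows how much attention the model pays to different facial regions, further supporting the effectiveness and soundness of the method. After deployment on edge devices, the average processing delay does not exceed 17.56 frame/s, providing a new option for depression screening.

Abstract: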

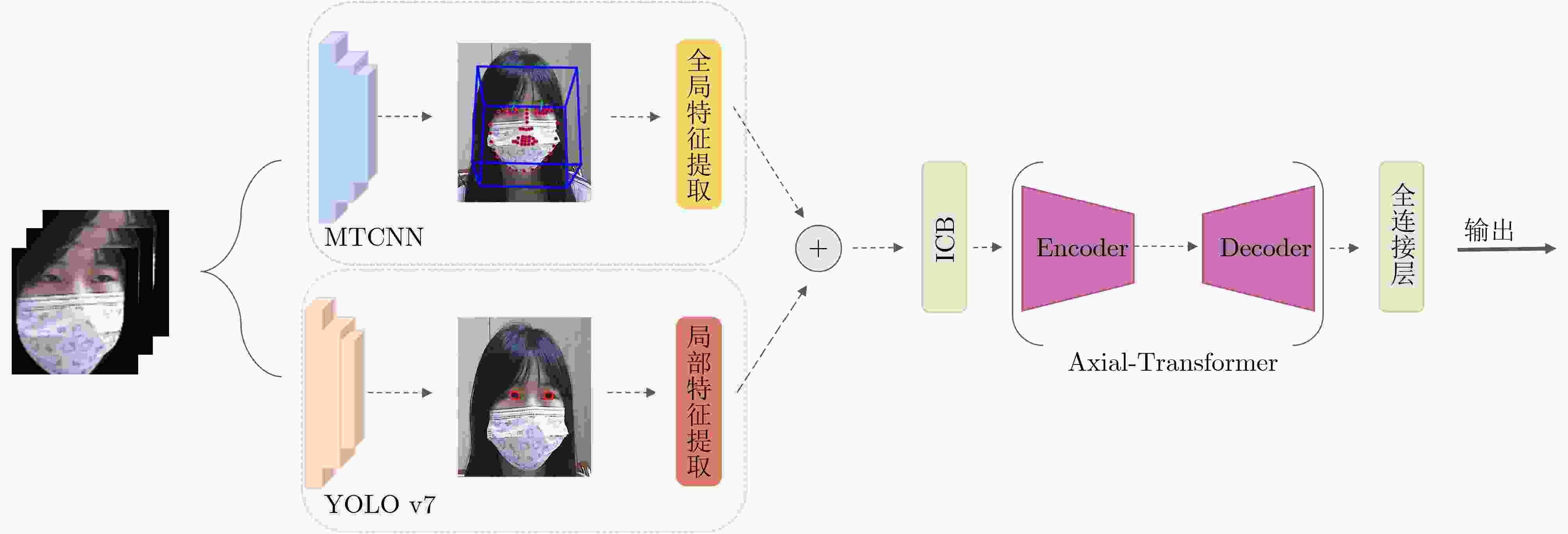

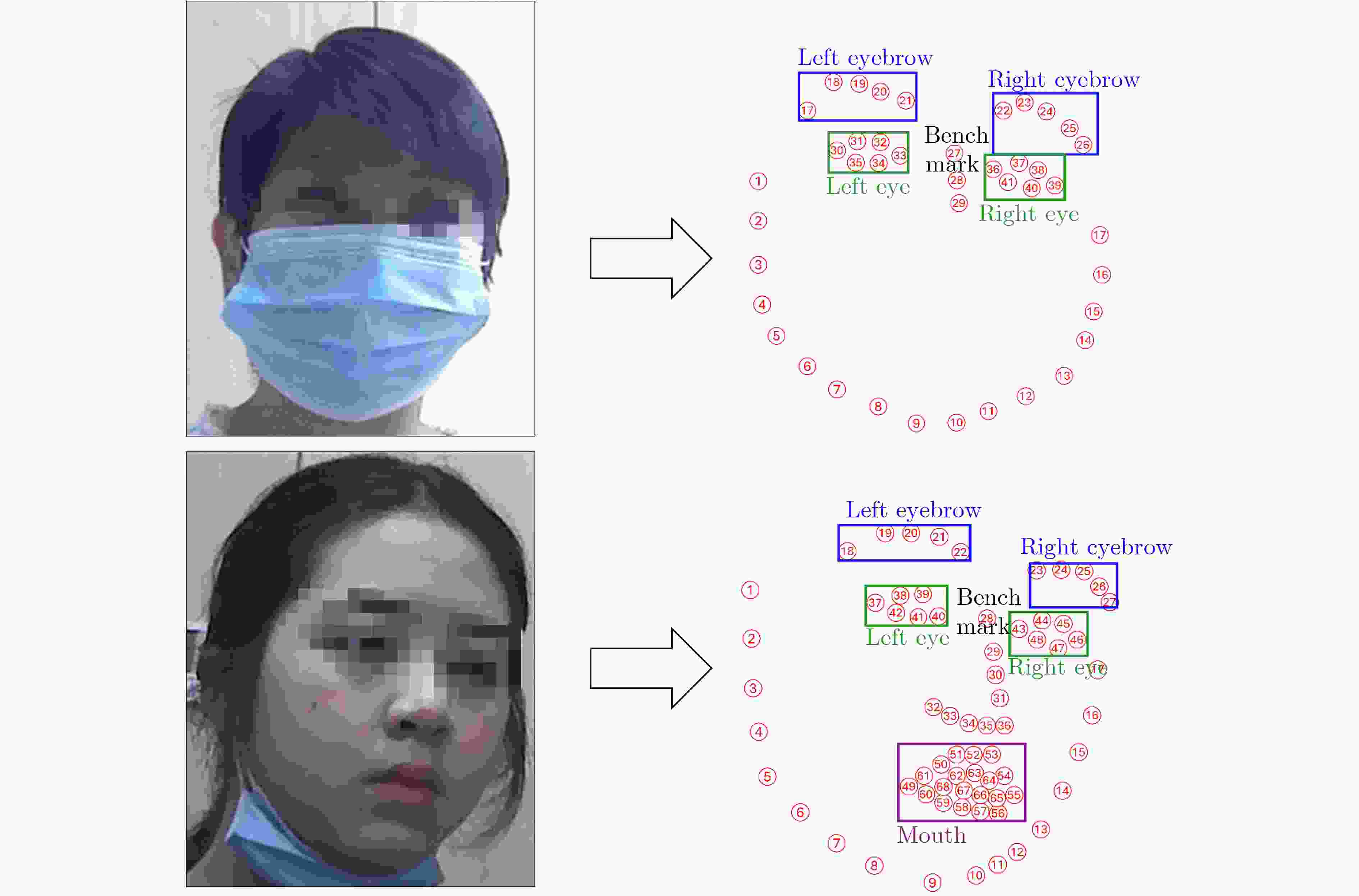

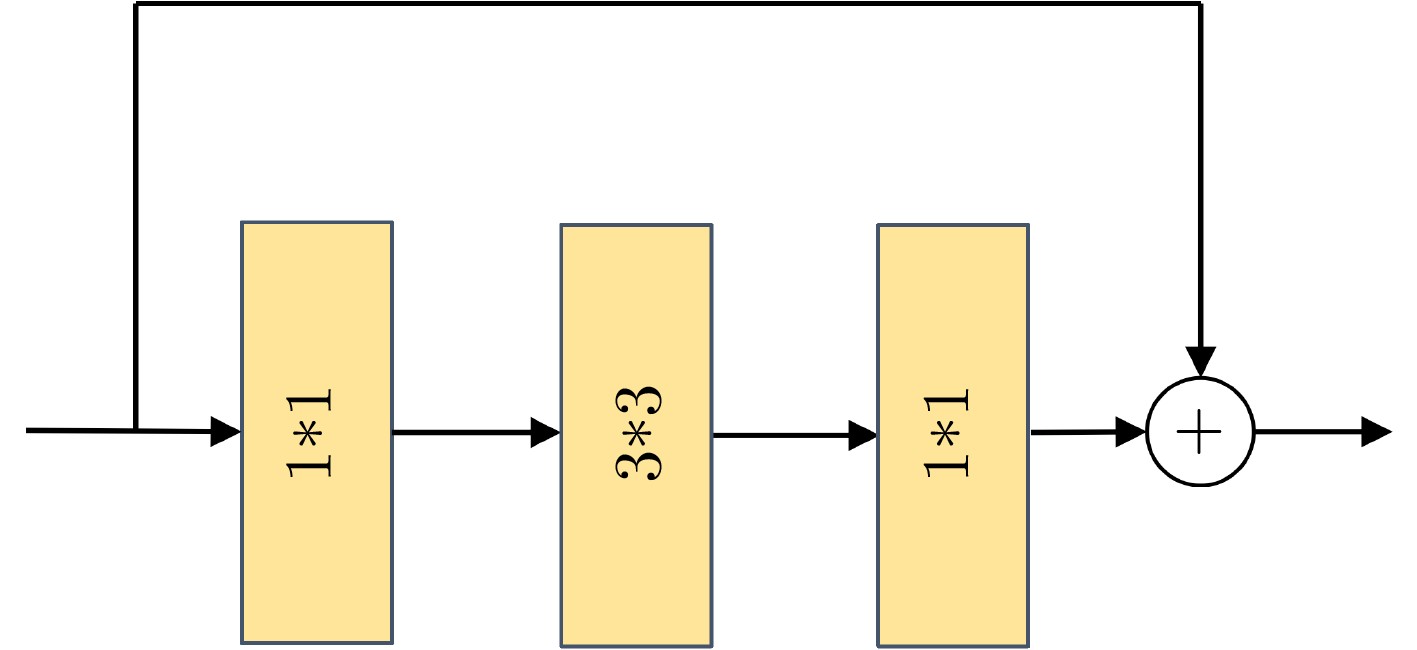

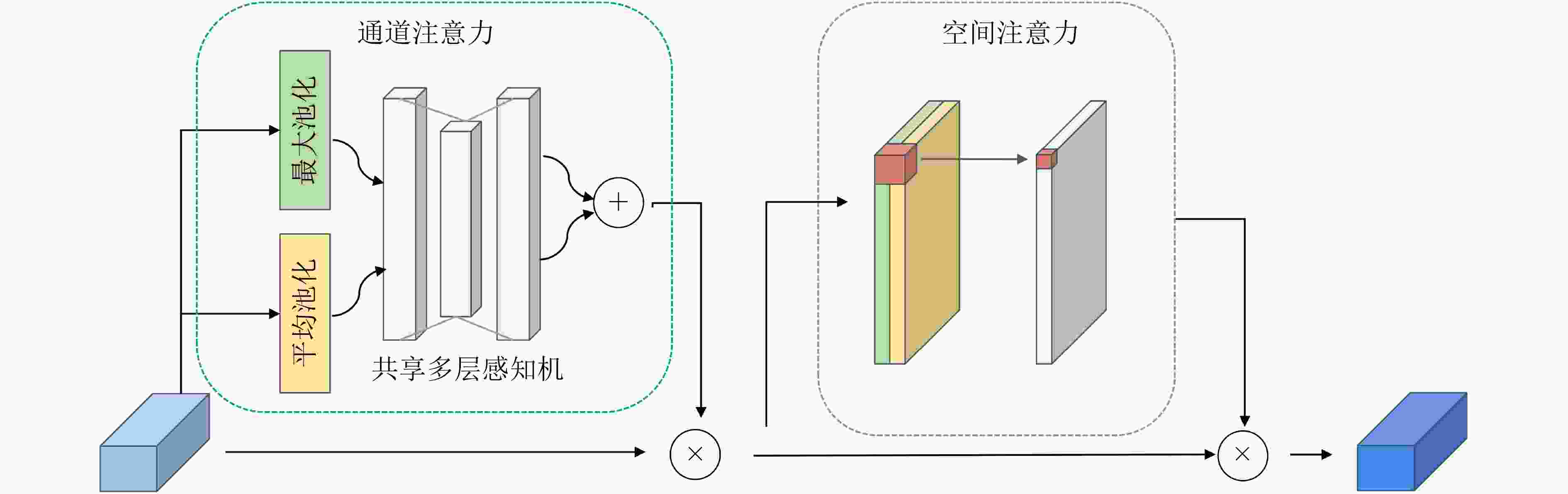

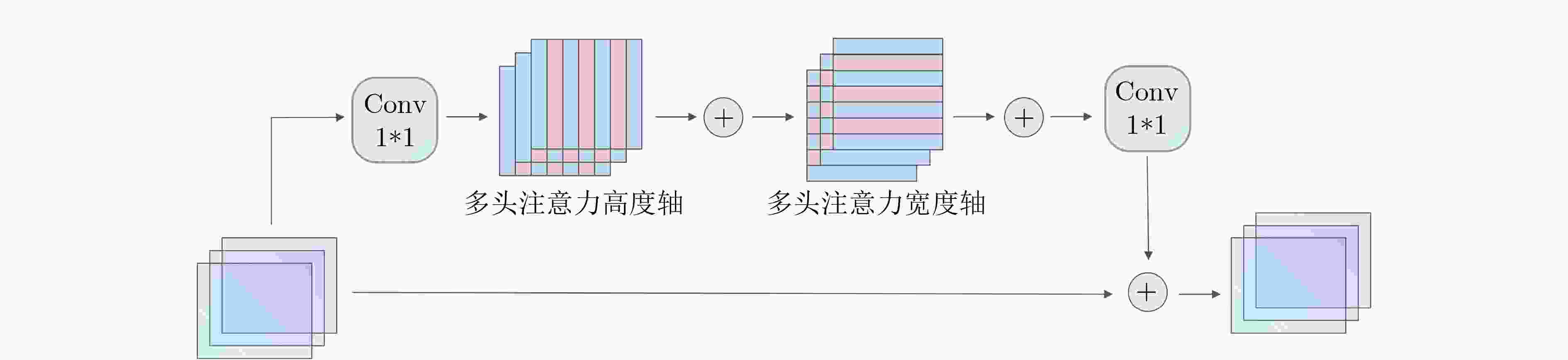

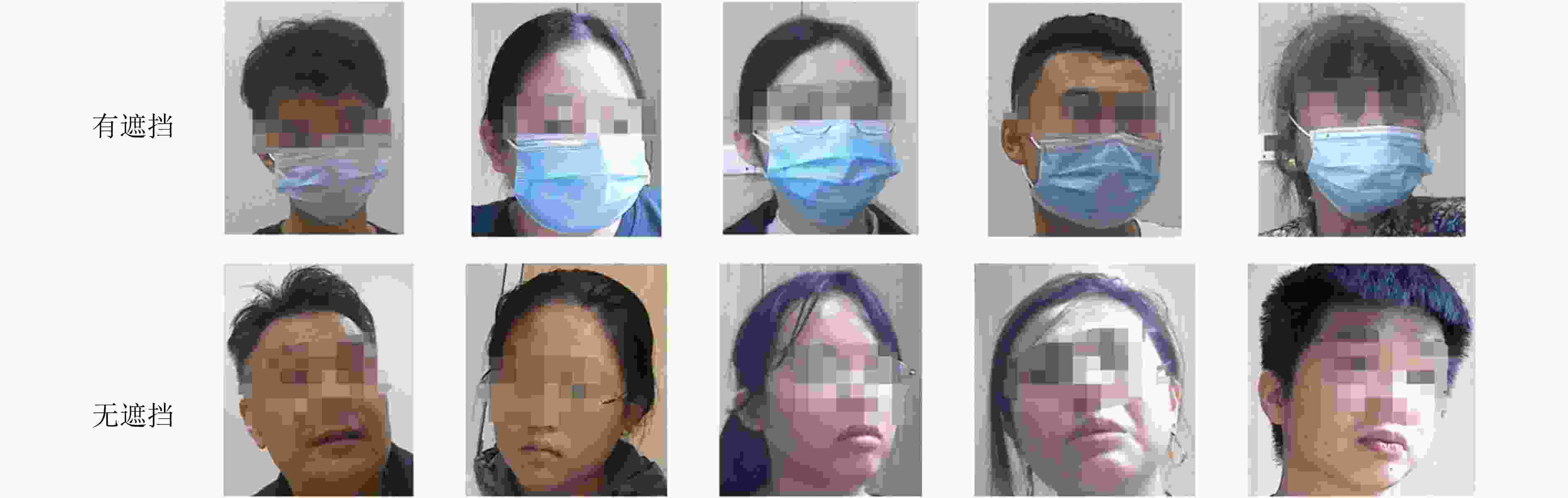

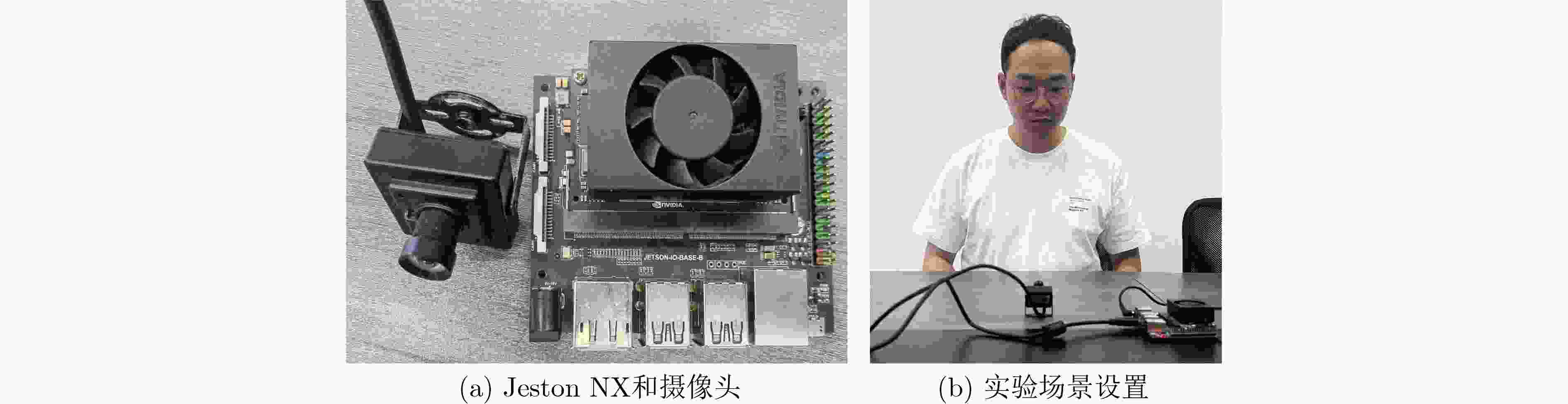

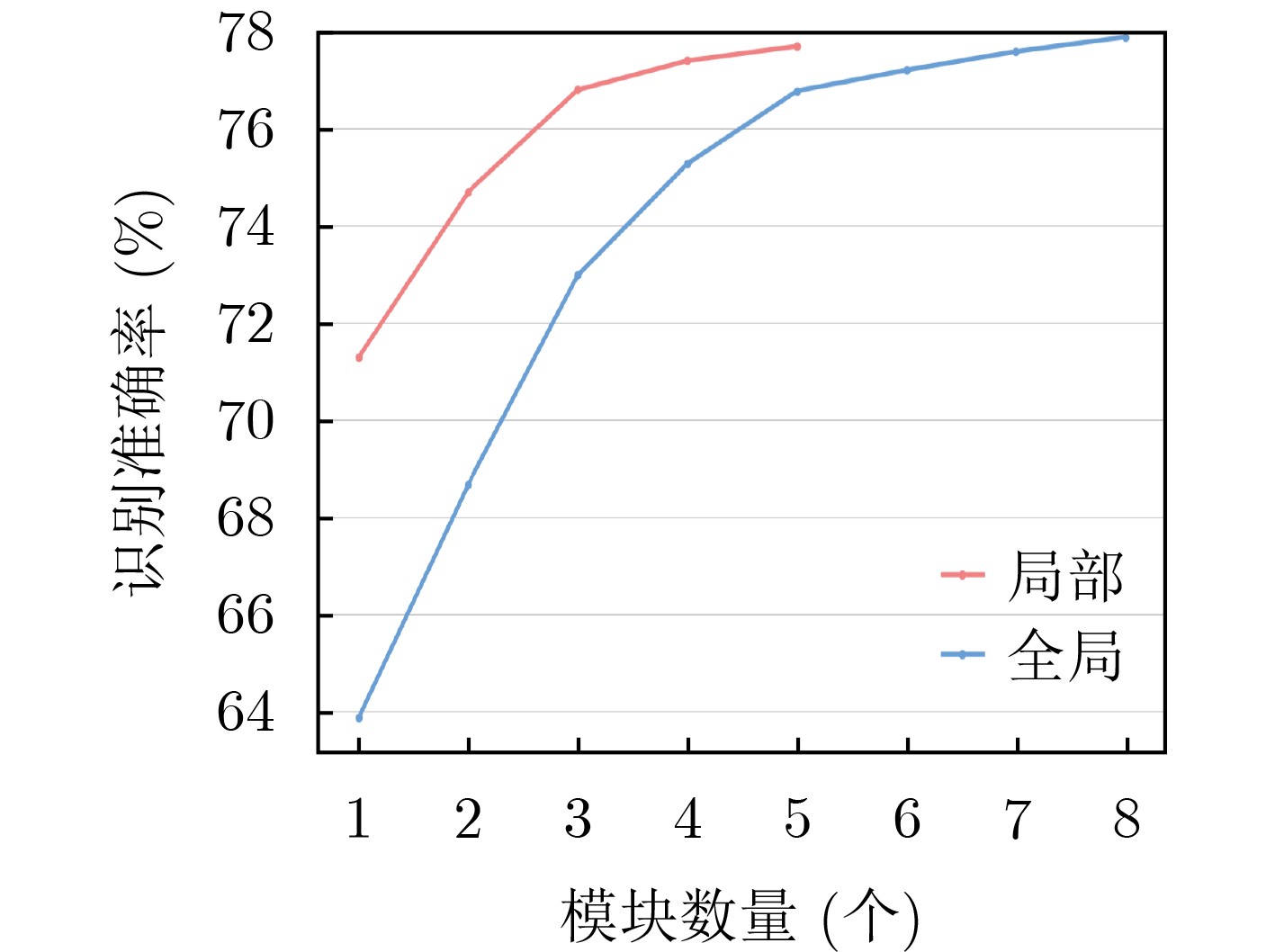

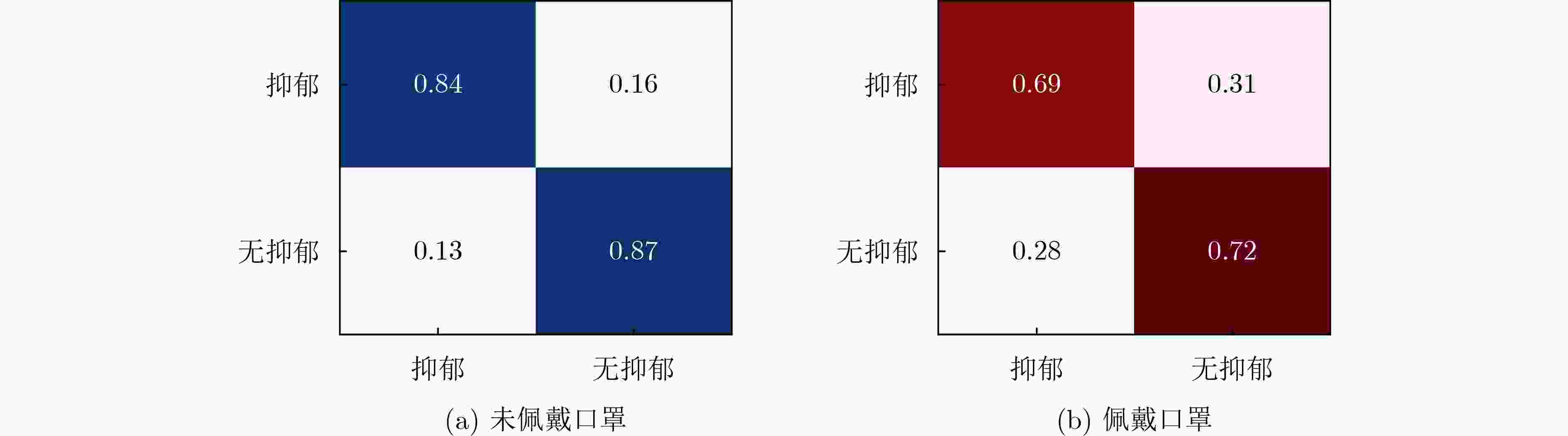

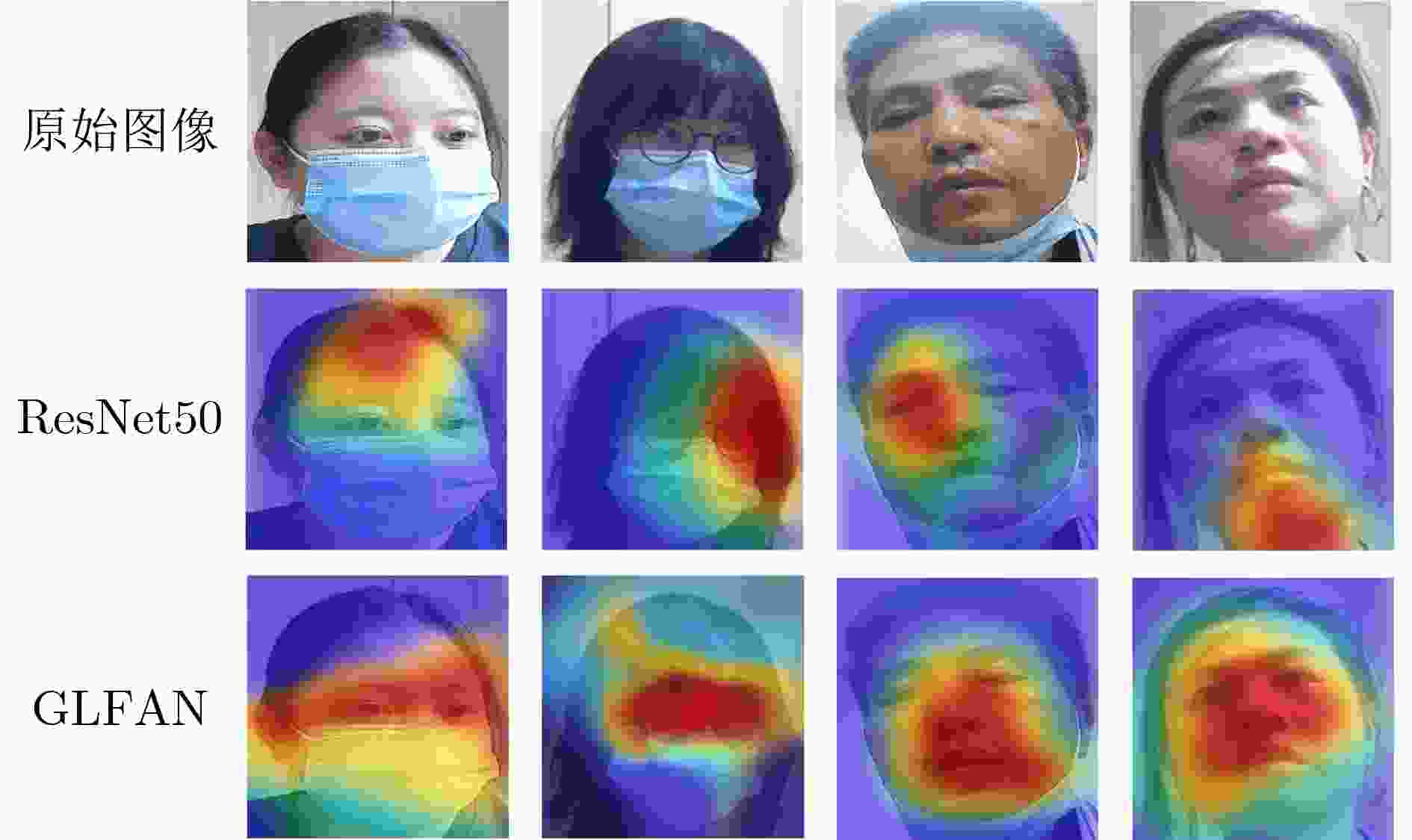

Objective: Depression is a globally prevalent mental disorder that poses a serious threat to the physical and mental health of millions of individuals. Early screening and diagnosis are essential to reducing severe consequences such as self-harm and suicide. However, conventional questionnaire-based screening methods are limited by their dependence on the reliability of respondents’ answers, their difficulty in balancing efficiency with accuracy, and the uneven distribution of medical resources. New auxiliary screening approaches are therefore needed. Existing Artificial Intelligence (AI) methods for depression detection based on facial features primarily emphasize global expressions and often overlook subtle local cues such as eye features. Their performance also declines in scenarios where partial facial information is obscured, for instance by masks, and they raise privacy concerns. This study proposes a Global-Local Fusion Axial Network (GLFAN) for depression screening. By jointly extracting global facial and local eye features, this approach enhances screening accuracy and robustness under complex conditions. A corresponding dataset is constructed, and experimental evaluations are conducted to validate the method’s effectiveness. The model is deployed on edge devices to improve privacy protection while maintaining screening efficiency, offering a more objective, accurate, efficient, and secure depression screening solution that contributes to mitigating global mental health challenges.

Methods: To address the challenges of accuracy and efficiency in depression screening, this study proposes GLFAN. For long-duration consultation videos with partial occlusions such as masks, data preprocessing is performed using OpenFace 2.0 and facial keypoint algorithms, combined with peak detection, clustering, and centroid search strategies to segment the videos into short sequences capturing dynamic facial changes, thereby enhancing data validity.
At the model level, GLFAN adopts a dual-branch parallel architecture to extract global facial and local eye features simultaneously. The global branch uses MTCNN for facial keypoint detection and enhances feature extraction under occlusion using an inverted bottleneck structure. The local branch detects eye regions via YOLO v7 and extracts eye movement features using a ResNet-18 network integrated with a convolutional attention module. Following dual-branch feature fusion, an integrated convolutional module optimizes the representation, and classification is performed using an axial attention network.

Results and Discussions: The performance of GLFAN is evaluated through comprehensive, multi-dimensional experiments.
On the self-constructed depression dataset, high accuracy is achieved in binary classification tasks, and non-depression and severe depression categories are accurately distinguished in four-class classification. Under mask-occluded conditions, a precision of 0.72 and a recall of 0.690 are obtained for depression detection. Although these values are lower than the precision of 0.87 and recall of 0.840 observed under non-occluded conditions, reliable screening performance is maintained. Compared with other advanced methods, GLFAN achieves higher recall and F1 scores. On the public AVEC2013 and AVEC2014 datasets, the model achieves lower Mean Absolute Error (MAE) values and shows advantages in both short- and long-sequence video processing. Heatmap visualizations indicate that GLFAN dynamically adjusts its attention according to the degree of facial occlusion, demonstrating stronger adaptability than ResNet-50. Edge device tests further confirm that the average processing delay remains below 17.56 frame/s, and stable performance is maintained under low-bandwidth conditions.

Conclusions: This study proposes a depression screening approach based on edge vision technology. A lightweight, end-to-end GLFAN is developed to address the limitations of existing screening methods. The model integrates global facial features extracted via MTCNN with local eye-region features captured by YOLO v7, followed by effective feature fusion and classification using an Axial Transformer module. By emphasizing local eye-region information, GLFAN enhances performance in occluded scenarios such as mask-wearing. Experimental validation using both self-constructed and public datasets demonstrates that GLFAN reduces missed detections and improves adaptability to short-duration video inputs compared with existing models.
Grad-CAM visualizations further reveal that GLFAN prioritizes eye-region features under occluded conditions and shifts focus to global facial features when full facial information is available, confirming its context-specific adaptability. The model has been successfully deployed on edge devices, offering a lightweight, efficient, and privacy-conscious solution for real-time depression screening.
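The precision and recall figures quoted above can be combined into an F1 score, which is the harmonic mean of the two. The following is a minimal Python sketch for checking such figures, not the authors' evaluation code. The function name `f1_score` is illustrative, and the F1 values it yields for the mask-occluded and non-occluded conditions are derived here from the quoted precision and recall rather than reported in the text.

```python
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision/recall pairs quoted in the summary and abstract.
reported = {
    "mask-occluded": (0.72, 0.69),
    "non-occluded": (0.87, 0.84),
    "self-built dataset overall": (0.76, 0.78),
}

for condition, (p, r) in reported.items():
    print(f"{condition}: F1 = {f1_score(p, r):.3f}")
```

For the overall pair (0.76, 0.78) the result rounds to 0.77, which agrees with the F1 score stated for the self-built dataset.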

Algorithm 1 Centroid search

Input: frame sequence set $ S $

Initialization: initialize $ S_c \in S $; current loop level $ \mathrm{Index} = 0 $.

REPEAT

(1) Split the input frames evenly into two segments: $ S_0, S_1 = \mathrm{halfSplit}(S_c) $;

(2) Keep the segment with the relatively larger offset: $ S_c = \mathrm{max\_offset}(\mathrm{abs}(S_0, S_1)) $;

(3) $ \mathrm{Index} \mathrel{+}= 1 $;

UNTIL $ \mathrm{len}(S_c) = 1 $;

Output: the frame set $ S_c $ containing the centroid.

Table 1 Sample distribution of the depression dataset

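| Depression severity | Samples without mask | Samples with mask | Total video duration (min) |
| --- | --- | --- | --- |
| Mild depression | 5 | 49 | 366 |
| Moderate depression | 7 | 46 | 269 |
| Severe depression | 7 | 30 | 346 |

Table 2 Depressive symptom self-assessment questionnaire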

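| No. | Question |
| --- | --- |
| 1 | How have your appetite and weight changed over the past two weeks? |
| 2 | How has your body been feeling recently? Is anything unusual? |
| 3 | How has your sleep been recently? |
| 4 | How often have you been in touch with friends recently? How would your best friend describe you? |
| 5 | How has your memory been recently? Do you often forget things? |
| 6 | What has interested you in your studies or work recently? How do you stay focused? |
| 7 | When do you feel fatigued or lacking in motivation? Does it happen often? |
| 8 | Have you had thoughts of suicide or self-harm? If so, what led to them? |
| 9 | How has your mood been recently? Low, depressed, hopeless? |
| 10 | What are you worried about right now? How do you plan to deal with it? |
| 11 | When do you feel that you are a terrible failure? Or have you let yourself or your family down? |
| 12 | When have you felt that your movements, thinking, or speech have slowed down? |

Table 3 Ablation results for distance metrics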

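| Distance strategy | Precision | Recall | F1 score |
| --- | --- | --- | --- |
| Cosine similarity | 0.57 | 0.66 | 0.6117 |
| Manhattan distance | 0.58 | 0.83 | 0.6828 |
| Chebyshev distance | 0.72 | 0.66 | 0.6886 |
| Euclidean distance | 0.75 | 0.96 | 0.8571 |

Table 4 Ablation results for different branch configurations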

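| Method | Precision | Recall | F1 score |
| --- | --- | --- | --- |
| Global face branch only | 0.74 | 0.68 | 0.7059 |
| Local branch only | 0.63 | 0.58 | 0.6034 |
| With Transformer classifier | 0.71 | 0.74 | 0.7242 |
| GLFAN | 0.76 | 0.78 | 0.7721 |

Table 5 Comparison of GLFAN with existing methods on the dataset of this paper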

Table 6 Comparison of GLFAN with other advanced models on the AVEC2013 and AVEC2014 datasets

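| Method | AVEC2013 RMSE | AVEC2013 MAE | AVEC2014 RMSE | AVEC2014 MAE |
| --- | --- | --- | --- | --- |
| Song et al.[22] | 8.10 | 6.16 | 7.15 | 5.95 |
| Shang et al.[33] | 8.20 | 6.38 | 7.84 | 6.08 |
| Zhou et al.[19] | 8.28 | 6.20 | 9.55 | 7.47 |
| De Melo et al.[34] | 7.55 | 6.24 | 7.65 | 6.06 |
| Pan et al.[36] | 7.26 | 5.97 | 7.30 | 5.99 |
| Casado et al.[37] | 8.01 | 6.43 | 8.49 | 6.57 |
| Zhang et al.[38] | 8.08 | 6.14 | 7.93 | 6.35 |
| Pan et al.[39] | 7.98 | 6.15 | 7.75 | 6.00 |
| Dai et al.[40] | 8.17 | – | 8.27 | – |
| Xu et al.[41] | 7.57 | 5.95 | 7.18 | 5.86 |
| GLFAN | 7.34 | 5.74 | 7.36 | 5.79 |

Table 7 Average depression detection latency for different video lengths (s)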

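| Network bandwidth (Mbit/(s·Hz)) | 5~7 min | 7~9 min | 9~11 min | 11~13 min |
| --- | --- | --- | --- | --- |
| 20 | 316 | 445 | 533 | 659 |
| 10 | 453 | 572 | 739 | 902 |
| 5 | 520 | 613 | 890 | 1032 |
| 1 | 732 | 827 | 1059 | 1337 |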
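The centroid search procedure listed before Table 1 repeatedly halves the frame sequence and keeps the half with the larger offset until a single frame remains. The Python sketch below illustrates that loop under stated assumptions. The source does not define how the offset of a segment is measured, so the code assumes each frame carries one scalar offset value (for example, a landmark displacement) and compares the summed absolute offsets of the two halves, keeping the first half on ties. The names `half_split`, `segment_offset`, and `centroid_search` are hypothetical and do not come from the paper.

```python
from typing import List, Sequence, Tuple

Frame = Tuple[int, float]  # (frame index, assumed scalar offset of that frame)


def half_split(frames: Sequence[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Split a frame sequence into two halves of (nearly) equal length."""
    mid = len(frames) // 2
    return list(frames[:mid]), list(frames[mid:])


def segment_offset(frames: Sequence[Frame]) -> float:
    """Assumed segment score: the sum of absolute per-frame offsets."""
    return sum(abs(offset) for _, offset in frames)


def centroid_search(frames: Sequence[Frame]) -> Tuple[Frame, int]:
    """Halve the sequence, keeping the larger-offset half, until one frame is left.

    Returns the surviving frame and the number of halving rounds (Index).
    """
    if not frames:
        raise ValueError("frames must not be empty")
    current = list(frames)
    index = 0
    while len(current) > 1:
        first, second = half_split(current)
        # Keep the half whose accumulated offset is larger (first half on ties).
        if segment_offset(first) >= segment_offset(second):
            current = first
        else:
            current = second
        index += 1
    return current[0], index


# Toy sequence with one pronounced movement at frame 3.
toy_frames = [(0, 0.1), (1, -0.2), (2, 0.3), (3, 2.5),
              (4, -0.4), (5, 0.2), (6, 0.1), (7, 0.0)]
centroid, rounds = centroid_search(toy_frames)
print(centroid, rounds)
```

On the toy sequence the search narrows to frame 3 after three halving rounds. A different offset measure would only require replacing `segment_offset`.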

[1] WHO. Depression and other common mental disorders: Global health estimates[R]. 2017.

[2] HERRMAN H, PATEL V, KIELING C, et al. Time for united action on depression: A Lancet–World Psychiatric Association Commission[J]. The Lancet, 2022, 399(10328): 957–1022. doi: 10.1016/S0140-6736(21)02141-3.

[3] THAPAR A, EYRE O, PATEL V, et al. Depression in young people[J]. The Lancet, 2022, 400(10352): 617–631. doi: 10.1016/S0140-6736(22)01012-1.

[4] ROBERSON K and CARTER R T. The relationship between race-based traumatic stress and the Trauma Symptom Checklist: Does racial trauma differ in symptom presentation?[J]. Traumatology, 2022, 28(1): 120–128. doi: 10.1037/trm0000306.

[5] CARROZZINO D, PATIERNO C, PIGNOLO C, et al. The concept of psychological distress and its assessment: A clinimetric analysis of the SCL-90-R[J]. International Journal of Stress Management, 2023, 30(3): 235–248. doi: 10.1037/str0000280.

[6] HAJDUSKA-DÉR B, KISS G, SZTAHÓ D, et al. The applicability of the Beck Depression Inventory and Hamilton Depression Scale in the automatic recognition of depression based on speech signal processing[J]. Frontiers in Psychiatry, 2022, 13: 879896. doi: 10.3389/fpsyt.2022.879896.

[7] LEE A and PARK J. Diagnostic test accuracy of the Beck Depression Inventory for detecting major depression in adolescents: A systematic review and meta-analysis[J]. Clinical Nursing Research, 2022, 31(8): 1481–1490. doi: 10.1177/10547738211065105.

[8] ZHANG Wufang, MA Ning, WANG Xun, et al. Management and services for psychosis in the People's Republic of China in 2020[J]. Chinese Journal of Psychiatry, 2022, 55(2): 122–128. doi: 10.3760/cma.j.cn113661-20210818-00252. (in Chinese)

[9] BABU N V and KANAGA E G M. Sentiment analysis in social media data for depression detection using artificial intelligence: A review[J]. SN Computer Science, 2022, 3(1): 74. doi: 10.1007/s42979-021-00958-1.

[10] EID M M, YUNDONG W, MENSAH G B, et al. Treating psychological depression utilising artificial intelligence: AI for precision medicine-focus on procedures[J]. Mesopotamian Journal of Artificial Intelligence in Healthcare, 2023, 2023: 76–81. doi: 10.58496/MJAIH/2023/015.

[11] SANCHEZ A, VAZQUEZ C, MARKER C, et al. Attentional disengagement predicts stress recovery in depression: An eye-tracking study[J]. Journal of Abnormal Psychology, 2013, 122(2): 303–313. doi: 10.1037/a0031529.

[12] ZHANG Kaipeng, ZHANG Zhanpeng, LI Zhifeng, et al. Joint face detection and alignment using multitask cascaded convolutional networks[J]. IEEE Signal Processing Letters, 2016, 23(10): 1499–1503. doi: 10.1109/LSP.2016.2603342.

[13] WANG Yang, WANG Hongyuan, and XIN Zihao. Efficient detection model of steel strip surface defects based on YOLO-V7[J]. IEEE Access, 2022, 10: 133936–133944. doi: 10.1109/ACCESS.2022.3230894.

[14] HO J, KALCHBRENNER N, WEISSENBORN D, et al. Axial attention in multidimensional transformers[J]. arXiv preprint arXiv:1912.12180, 2019.

[15] LIU Zhuang, MAO Hanzi, WU Chaoyuan, et al. A ConvNet for the 2020s[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 11966–11976. doi: 10.1109/CVPR52688.2022.01167.

[16] WOO S, PARK J, LEE J Y, et al. CBAM: Convolutional block attention module[C]. The 15th European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 3–19. doi: 10.1007/978-3-030-01234-2_1.

[17] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[18] SELVARAJU R R, COGSWELL M, DAS A, et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization[C]. The IEEE International Conference on Computer Vision, Venice, Italy, 2017: 618–626. doi: 10.1109/ICCV.2017.74.

[19] ZHOU Xiuzhuang, JIN Kai, SHANG Yuanyuan, et al. Visually interpretable representation learning for depression recognition from facial images[J]. IEEE Transactions on Affective Computing, 2020, 11(3): 542–552. doi: 10.1109/TAFFC.2018.2828819.

[20] DE MELO W C, GRANGER E, and HADID A. Depression detection based on deep distribution learning[C]. 2019 IEEE International Conference on Image Processing (ICIP), Taipei, China, 2019: 4544–4548. doi: 10.1109/ICIP.2019.8803467.

[21] DE MELO W C, GRANGER E, and LOPEZ M B. Encoding temporal information for automatic depression recognition from facial analysis[C]. ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 2020: 1080–1084. doi: 10.1109/ICASSP40776.2020.9054375.

[22] SONG Siyang, JAISWAL S, SHEN Linlin, et al. Spectral representation of behaviour primitives for depression analysis[J]. IEEE Transactions on Affective Computing, 2022, 13(2): 829–844. doi: 10.1109/TAFFC.2020.2970712.

[23] YU Ming, XU Xinyi, SHI Shuo, et al. Depression recognition algorithm based on facial deep spatio-temporal features[J]. Video Engineering, 2020, 44(11): 12–18. doi: 10.16280/j.videoe.2020.11.04. (in Chinese)

[24] HE Lang, CHAN J C W, and WANG Zhongmin. Automatic depression recognition using CNN with attention mechanism from videos[J]. Neurocomputing, 2021, 422: 165–175. doi: 10.1016/j.neucom.2020.10.015.

[25] NIU Mingyue, TAO Jianhua, and LIU Bin. Multi-scale and multi-region facial discriminative representation for automatic depression level prediction[C]. The ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing, Toronto, Canada, 2021: 1325–1329. doi: 10.1109/ICASSP39728.2021.9413504.

[26] ZHENG Yajing, WU Xiaohang, LIN Xiaoming, et al. The prevalence of depression and depressive symptoms among eye disease patients: A systematic review and meta-analysis[J]. Scientific Reports, 2017, 7(1): 46453. doi: 10.1038/srep46453.

[27] BALTRUSAITIS T, ZADEH A, LIM Y C, et al. OpenFace 2.0: Facial behavior analysis toolkit[C]. 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 2018: 59–66. doi: 10.1109/FG.2018.00019.

[28] BULAT A and TZIMIROPOULOS G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks)[C]. The IEEE International Conference on Computer Vision, Venice, Italy, 2017: 1021–1030. doi: 10.1109/ICCV.2017.116.

[29] HAN Kai, XIAO An, WU Enhua, et al. Transformer in transformer[C]. The 35th International Conference on Neural Information Processing Systems, 2021: 1217.

[30] DOSOVITSKIY A, BEYER L, KOLESNIKOV A, et al. An image is worth 16x16 words: Transformers for image recognition at scale[C]. The 9th International Conference on Learning Representations, Austria, 2021.

[31] DEROGATIS L R and CLEARY P A. Confirmation of the dimensional structure of the SCL-90: A study in construct validation[J]. Journal of Clinical Psychology, 1977, 33(4): 981–989. doi: 10.1002/1097-4679(197710)33:4<981::AID-JCLP2270330412>3.0.CO;2-0.

[32] American Psychiatric Association, ZHANG Daolong, et al. translation. Diagnostic and Statistical Manual of Mental Disorders[M]. 5th ed. Beijing: Peking University Press, 2015: 70–71. (in Chinese)

[33] SHANG Yuanyuan, PAN Yuchen, JIANG Xiao, et al. LQGDNet: A local quaternion and global deep network for facial depression recognition[J]. IEEE Transactions on Affective Computing, 2023, 14(3): 2557–2563. doi: 10.1109/TAFFC.2021.3139651.

[34] DE MELO W C, GRANGER E, and LÓPEZ M B. MDN: A deep maximization-differentiation network for spatio-temporal depression detection[J]. IEEE Transactions on Affective Computing, 2023, 14(1): 578–590. doi: 10.1109/TAFFC.2021.3072579.

[35] ONYEMA E M, SHUKLA P K, DALAL S, et al. Enhancement of patient facial recognition through deep learning algorithm: ConvNet[J]. Journal of Healthcare Engineering, 2021, 2021(1): 5196000. doi: 10.1155/2021/5196000.

[36] PAN Yuchen, SHANG Yuanyuan, SHAO Zhuhong, et al. Integrating deep facial priors into landmarks for privacy preserving multimodal depression recognition[J]. IEEE Transactions on Affective Computing, 2024, 15(3): 828–836. doi: 10.1109/TAFFC.2023.3296318.

[37] CASADO C Á, CAÑELLAS M L, and LÓPEZ M B. Depression recognition using remote photoplethysmography from facial videos[J]. IEEE Transactions on Affective Computing, 2023, 14(4): 3305–3316. doi: 10.1109/TAFFC.2023.3238641.

[38] ZHANG Shiqing, ZHANG Xingnan, ZHAO Xiaoming, et al. MTDAN: A lightweight multi-scale temporal difference attention networks for automated video depression detection[J]. IEEE Transactions on Affective Computing, 2024, 15(3): 1078–1089. doi: 10.1109/TAFFC.2023.3312263.

[39] PAN Yuchen, SHANG Yuanyuan, LIU Tie, et al. Spatial–temporal attention network for depression recognition from facial videos[J]. Expert Systems with Applications, 2024, 237: 121410. doi: 10.1016/j.eswa.2023.121410.

[40] DAI Ziqian, LI Qiuping, SHANG Yichen, et al. Depression detection based on facial expression, audio and gait[C]. 2023 IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 2023: 1568–1573. doi: 10.1109/ITNEC56291.2023.10082163.

[41] XU Jiaqi, GUNES H, KUSUMAM K, et al. Two-stage temporal modelling framework for video-based depression recognition using graph representation[J]. IEEE Transactions on Affective Computing, 2025, 16(1): 161–178. doi: 10.1109/TAFFC.2024.3415770.
