Long-term Transformer and Adaptive Fourier Transform for Dynamic Graph Convolutional Traffic Flow Prediction Study


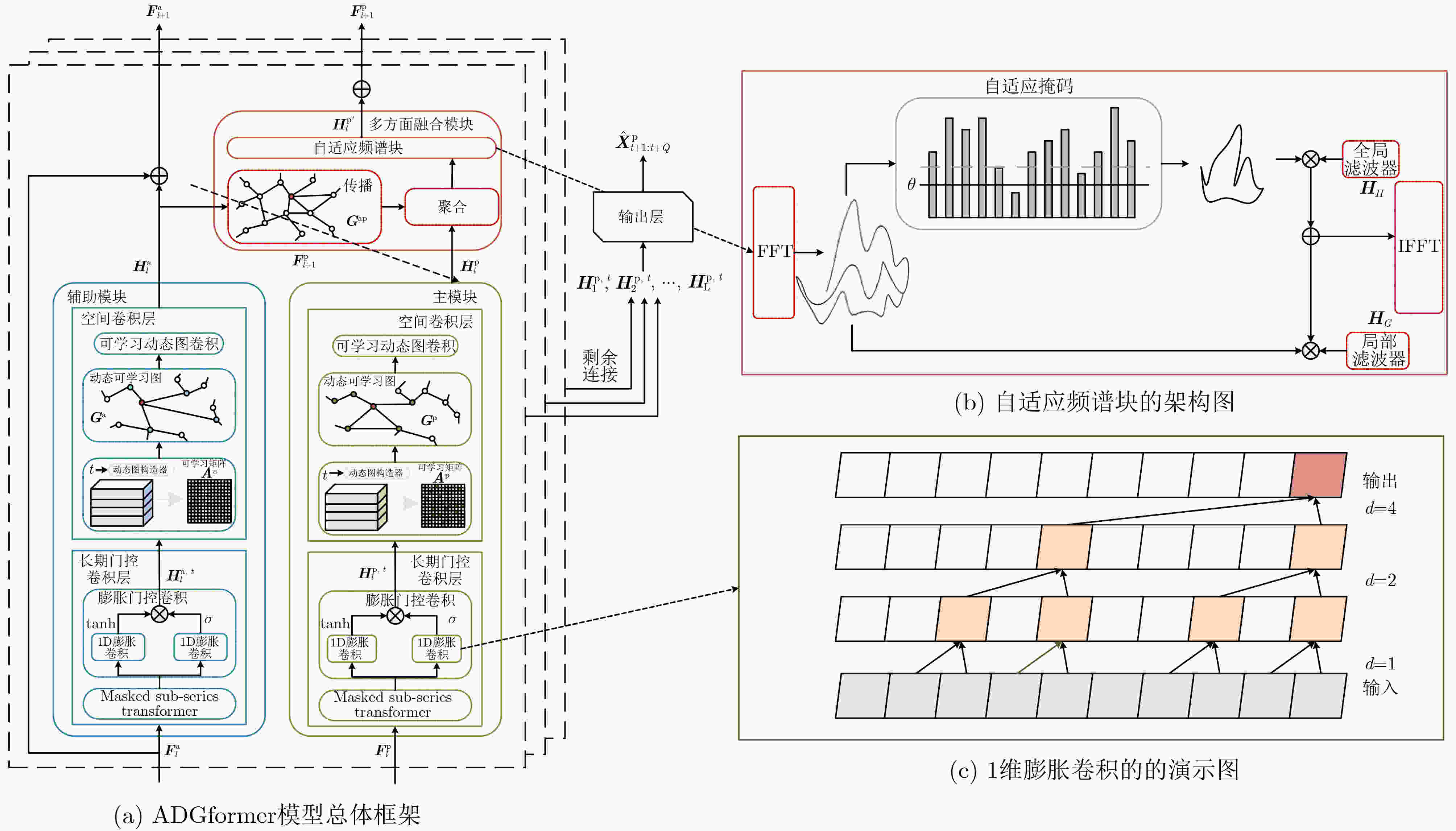

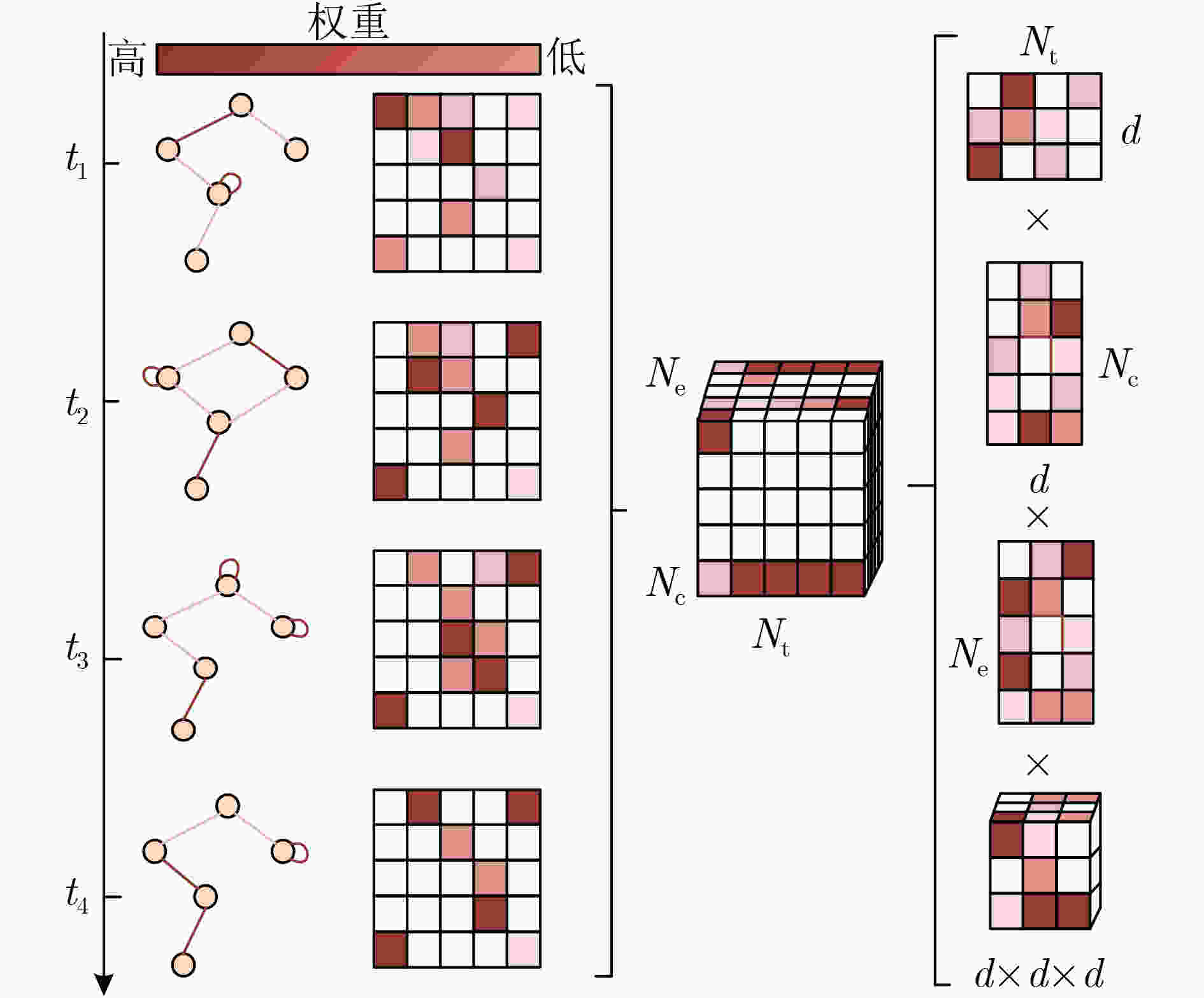

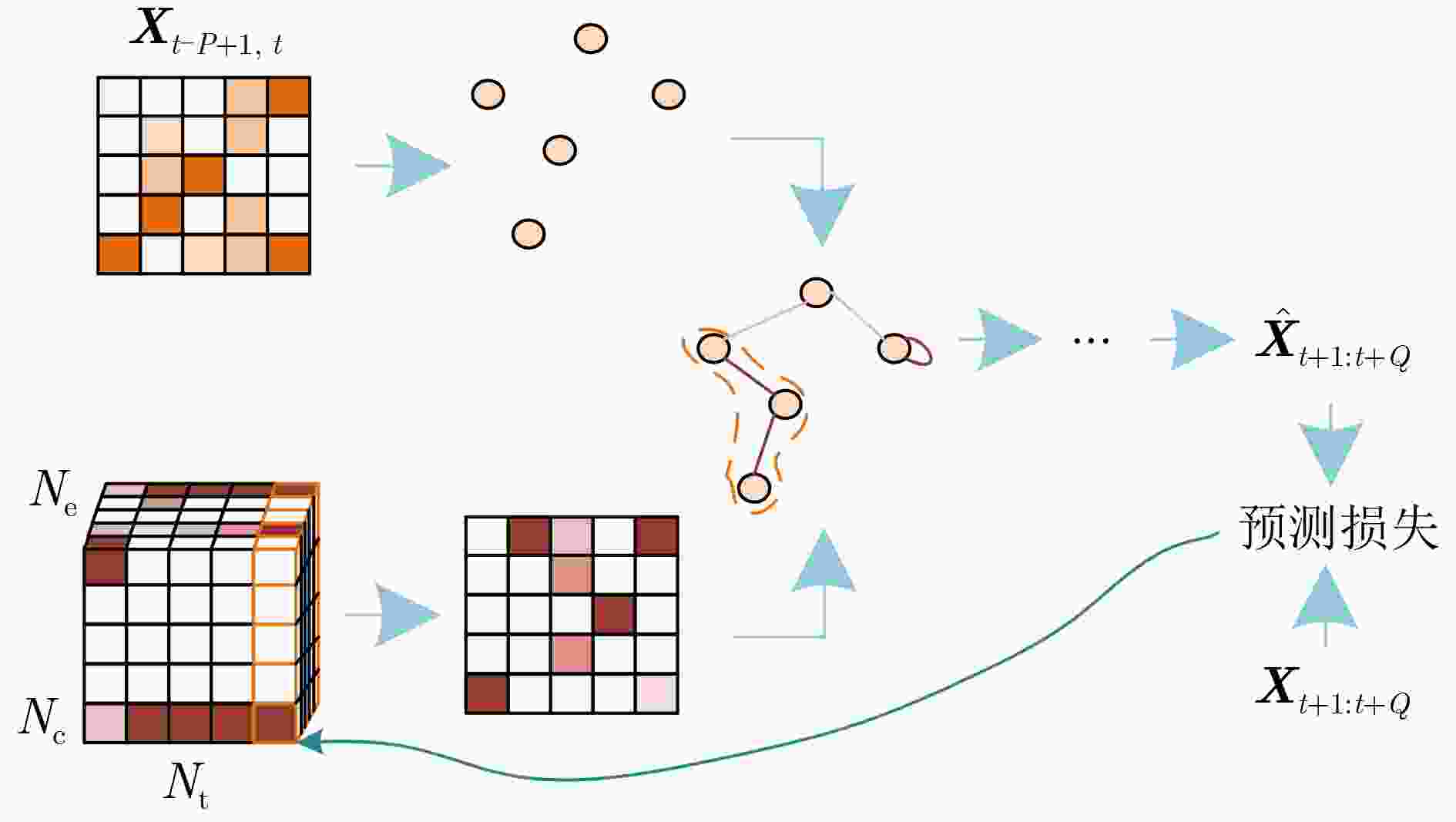

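Abstract: To address the difficulty of modeling the long-term trends and non-stationarity of traffic flow, and of capturing its hidden dynamic spatio-temporal features, this paper proposes a dynamic graph convolutional traffic flow prediction model based on a long-term Transformer and an adaptive Fourier transform (ADGformer). The long-term gated convolutional layer uses a masked sub-series Transformer to learn compressed, context-rich sub-series representations from long historical sequences, and applies dilated gated convolution to the temporal representations of the sub-series to capture the long-term trend features of traffic flow. A dynamic graph constructor generates dynamic learnable graphs, and learnable dynamic graph convolution models the latent, time-varying spatial dependencies among nodes to capture the dynamic hidden spatial features of traffic flow. In addition, an adaptive spectral block uses the Fourier transform to enhance feature representation and capture long- and short-term interactions, while adaptive thresholding reduces the non-stationarity of traffic flow. Experimental results show that the proposed ADGformer model achieves good prediction performance.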
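As a rough illustration of the dynamic graph constructor and learnable dynamic graph convolution described above, the following pure-Python sketch derives an adjacency matrix from the nodes' current hidden states and then aggregates features over it. The scoring form `softmax(ReLU((H W_src)(H W_dst)^T))`, the function names, and the toy weights are assumptions made for illustration; the abstract does not specify the constructor at this level of detail.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def row_softmax(m):
    """Apply a numerically stable softmax to each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def dynamic_adjacency(hidden, w_src, w_dst):
    """Build a row-normalised adjacency from the current node hidden states.

    Assumed form (not taken from the paper):
    A = softmax(ReLU((H W_src)(H W_dst)^T)).
    """
    src = matmul(hidden, w_src)
    dst = matmul(hidden, w_dst)
    dst_t = [list(col) for col in zip(*dst)]          # transpose of dst
    scores = matmul(src, dst_t)                       # pairwise node affinities
    scores = [[max(0.0, s) for s in row] for row in scores]
    return row_softmax(scores)

def graph_conv(adj, features, weight):
    """One graph-convolution step: aggregate neighbours, then project (A X W)."""
    return matmul(matmul(adj, features), weight)

# Toy example: 3 nodes with 2 hidden features each (hypothetical values).
hidden = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
adj = dynamic_adjacency(hidden, identity, identity)
out = graph_conv(adj, hidden, [[1.0], [1.0]])
```

Because the adjacency is recomputed from `hidden` at every step, nodes with similar current states (here nodes 0 and 1) are linked more strongly than dissimilar ones, which is the intuition behind a time-varying graph. In a trained model the projection matrices would be learned rather than fixed.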


Keywords:

- Traffic flow prediction

- Dynamic graph convolution

- Long-term Transformer

- Fourier transform

- Non-stationarity

Abstract:

Objective: The rapid development of Intelligent Transportation Systems (ITS), combined with population growth and increasing vehicle ownership, has intensified traffic congestion and road capacity limitations. Accurate and timely traffic flow prediction supports traffic control centers in road management and assists travelers in avoiding congestion and improving travel efficiency, thereby relieving traffic pressure and enhancing road network utilization. However, current models struggle to capture the complex, non-stationary, and long-term dependent characteristics of traffic systems, limiting prediction accuracy. To address these limitations, deep learning approaches, such as dynamic graph convolution and Transformer architectures, have gained prominence in traffic prediction. This study proposes a dynamic graph convolutional traffic flow prediction model (ADGformer) that integrates a long-term Transformer with an adaptive Fourier transform. The model is designed to more effectively capture long-term temporal dependencies, handle non-stationary patterns, and extract latent dynamic spatiotemporal features from traffic flow data.

Methods: The ADGformer model, based on a Long-Term Transformer and Adaptive Fourier Transform, comprises three primary components: a stacked Long-term Gated Convolutional (LGConv) layer, a spatial convolutional layer, and a Multifaceted Fusion Module. The LGConv layer integrates a Masked Sub-series Transformer and dilated gated convolution to extract long-term trend features from temporally segmented traffic flow series, thereby enhancing long-term prediction performance. The Masked Sub-series Transformer, comprising the Subsequent Temporal Representation Learner (STRL) and a self-supervised task head, learns compact and context-rich representations from extended time series sub-segments.
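The segmentation and masking step that feeds the Masked Sub-series Transformer can be sketched as follows. This is a minimal illustration under stated assumptions: the sub-series length of 12 steps, the 75% masking ratio, and the helper names are hypothetical choices, not values reported in the abstract. Only the 5-minute sampling interval comes from the dataset description.

```python
import random

def split_subseries(series, length):
    """Cut a long series into non-overlapping sub-series of a fixed length."""
    usable = len(series) - len(series) % length   # drop any incomplete tail
    return [series[i:i + length] for i in range(0, usable, length)]

def mask_subseries(patches, ratio, rng):
    """Hide a random subset of sub-series.

    Returns the visible (index, patch) pairs and the sorted masked indices;
    a self-supervised head would be trained to reconstruct the masked ones.
    """
    n_mask = int(len(patches) * ratio)
    masked = set(rng.sample(range(len(patches)), n_mask))
    visible = [(i, p) for i, p in enumerate(patches) if i not in masked]
    return visible, sorted(masked)

# One day of 5-minute readings (288 steps) cut into hourly sub-series of 12 steps.
series = [float(t) for t in range(288)]
patches = split_subseries(series, 12)
visible, masked = mask_subseries(patches, 0.75, random.Random(0))
```

Working on sub-series rather than individual time steps shortens the sequence the Transformer attends over (24 tokens instead of 288 in this toy case), which is what makes long histories tractable.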
The spatial convolutional layer incorporates a dynamic graph constructor that generates learnable graphs based on the hidden states of spatially related nodes. These dynamic graphs are then processed using learnable dynamic graph convolution to extract latent spatial features that evolve over time. To address the non-stationarity of traffic flow sequences, an adaptive spectral block based on the Fourier transform is introduced.

Results and Discussions: The ADGformer model adaptively models inter-node relationships through a dynamic graph constructor, effectively capturing spatial dependencies within traffic networks and the evolving spatial characteristics of traffic flows. It also learns compressed, context-rich subsequence representations and long-term temporal patterns from extended input sequences. The adaptive spectral block, based on the Fourier transform, reduces the non-stationarity of traffic flow data. To evaluate model performance, this study conducts comparison and ablation experiments on three benchmark datasets. On the PEMSD4 dataset, the ADGformer model reduces MAE under Horizon 3 by approximately 21.57% and 16.72% compared with traditional models such as VAR and LR, respectively. Under Horizon 12 on the England dataset, ADGformer reduces RMSE by 3.35% and 2.44% compared with GWNet and MTGNN, respectively. ADGformer achieves the highest prediction accuracy across all three datasets (Table 2). Visualization comparisons with XGBoost, ASTGCN, and DCRNN on the PEMSD4 and England datasets further confirm its superior long-term predictive performance (Fig. 5). The model maintains robust performance as the prediction horizon increases. To assess the contributions of individual components, ablation experiments are performed for Horizons 3, 6, and 12. On Horizon 12 of the PEMSD4 dataset, ADGformer improves MAE by approximately 3.69%, 5.09%, 0.92%, and 7.59% relative to the variant models NMS-Trans, NLGConv, NASB, and NDGConv, respectively.
On the England dataset, also at Horizon 12, MAPE is reduced by 3.56%, 18.53%, 2.98%, and 4.87%, respectively (Table 3). Visual results of the ablation study (Fig. 6) show that ADGformer consistently outperforms its variants as the forecast step increases, confirming the effectiveness of each module in the proposed model.

Conclusions: Existing traffic flow prediction models have three limitations: (1) failure to capture hidden dynamic spatio-temporal correlations among road network nodes, (2) insufficient learning of key temporal information and long-term trend features from extended historical sequences, and (3) limited ability to reduce the non-stationarity of traffic data. To address them, this study proposes a combinatorial traffic flow prediction model, ADGformer, which enables accurate long-term traffic flow prediction. ADGformer employs a Masked Sub-series Transformer that is pre-trained on long historical sequences to extract compact, information-rich subsequence representations. A gated mechanism combined with one-dimensional dilated convolution is then applied to capture long-term trend features and enhance temporal modeling capacity. The model also integrates a spatial convolutional layer constructed from dynamic traffic patterns. Through learnable dynamic graph convolution, it effectively captures evolving spatial dependencies across the network. Furthermore, an adaptive spectral block based on the Fourier transform is designed to enhance feature representation and reduce non-stationarity by applying adaptive thresholding to suppress noise, while preserving both long- and short-term interactions. Given the complex and variable nature of traffic flow, future work will consider integrating additional features such as periodicity to further improve prediction performance.
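The idea behind the adaptive spectral block (transform to the frequency domain, suppress weak components with a data-dependent threshold, transform back) can be sketched in plain Python. This is an illustrative sketch only: the naive DFT stands in for an FFT, and the threshold rule (a fixed fraction `scale` of the mean non-DC power) is an assumption. In the model the threshold would presumably be learned, and the abstract does not give its exact form.

```python
import cmath
import math

def dft(x):
    """Naive O(n^2) discrete Fourier transform (stand-in for an FFT)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spec):
    """Inverse transform; returns the real part of the reconstructed signal."""
    n = len(spec)
    return [(sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

def adaptive_spectral_filter(x, scale=0.5):
    """Zero out frequency bins whose power falls below a data-dependent threshold.

    Assumption: threshold = scale * mean power of the non-DC bins.
    The DC bin (k == 0) is always kept so the signal level is preserved.
    """
    spec = dft(x)
    power = [abs(c) ** 2 for c in spec]
    threshold = scale * sum(power[1:]) / (len(power) - 1)
    kept = [c if k == 0 or power[k] >= threshold else 0j for k, c in enumerate(spec)]
    return idft(kept)

# Toy signal: a slow periodic pattern plus a small fast oscillation acting as noise.
n = 32
clean = [10 + 5 * math.sin(2 * math.pi * t / 16) for t in range(n)]
noisy = [c + 0.2 * (-1) ** t for t, c in enumerate(clean)]
smoothed = adaptive_spectral_filter(noisy)
max_error = max(abs(s - c) for s, c in zip(smoothed, clean))
```

In this toy case the dominant periodic component survives the threshold while the weak high-frequency term is removed, so `smoothed` matches `clean` up to floating-point error.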

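Table 1 Dataset details

| Dataset | Nodes | Edges | Time step (min) | Time span (months) |
| --- | --- | --- | --- | --- |
| PEMSD4 | 307 | 16969 | 5 | 2 |
| PEMSD8 | 170 | 17833 | 5 | 2 |
| England | 314 | 17353 | 15 | 6 |

Table 2 Prediction results of the models on the three datasets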

| Dataset | Model | Horizon 3 MAE | Horizon 3 RMSE | Horizon 3 MAPE | Horizon 6 MAE | Horizon 6 RMSE | Horizon 6 MAPE | Horizon 12 MAE | Horizon 12 RMSE | Horizon 12 MAPE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PEMSD4 | HA | 3.5679 | 6.7787 | 0.0803 | 3.5689 | 6.7797 | 0.0804 | 3.5707 | 6.7815 | 0.0804 |
| PEMSD4 | VAR | 1.6624 | 3.0882 | 0.0327 | 2.1250 | 4.0156 | 0.0433 | 2.5687 | 4.8347 | 0.0536 |
| PEMSD4 | XGBoost | 1.4471 | 2.9732 | 0.0288 | 1.9159 | 4.0955 | 0.0413 | 2.5327 | 5.3239 | 0.0585 |
| PEMSD4 | LR | 1.5655 | 3.1384 | 0.0309 | 2.0758 | 4.2918 | 0.0439 | 2.7951 | 5.5978 | 0.0627 |
| PEMSD4 | ASTGCN | 1.5428 | 3.1428 | 0.0323 | 1.9374 | 4.1062 | 0.0425 | 2.4424 | 5.1392 | 0.0552 |
| PEMSD4 | GMAN | 1.3760 | 2.9803 | 0.0279 | 1.6213 | 3.7906 | 0.0348 | 1.8650 | 4.4791 | 0.0416 |
| PEMSD4 | DCRNN | 1.4295 | 2.8805 | 0.0281 | 1.8531 | 3.8731 | 0.0391 | 2.3666 | 4.8991 | 0.0527 |
| PEMSD4 | MTGNN | 1.3485 | 2.8517 | 0.0268 | 1.6454 | 3.7523 | 0.0348 | 1.9306 | 4.4972 | 0.0426 |
| PEMSD4 | GWNet | 1.3266 | 2.8159 | 0.0266 | 1.6456 | 3.7640 | 0.0356 | 1.9550 | 4.5560 | 0.0446 |
| PEMSD4 | ADGformer | 1.3038 | 2.7286 | 0.0256 | 1.6060 | 3.6843 | 0.0327 | 1.8629 | 4.3676 | 0.0411 |
| PEMSD8 | HA | 2.8119 | 5.6763 | 0.0631 | 2.8099 | 5.6730 | 0.0630 | 2.8047 | 5.6647 | 0.0627 |
| PEMSD8 | VAR | 1.1327 | 2.0712 | 0.0224 | 1.7166 | 3.3053 | 0.0356 | 2.1661 | 4.2500 | 0.0467 |
| PEMSD8 | XGBoost | 1.1997 | 2.5698 | 0.0241 | 1.5604 | 3.5030 | 0.0338 | 2.0008 | 4.4840 | 0.0458 |
| PEMSD8 | LR | 1.2711 | 2.6335 | 0.0244 | 1.6614 | 3.5606 | 0.0335 | 2.1681 | 4.5794 | 0.0459 |
| PEMSD8 | ASTGCN | 1.3794 | 2.9934 | 0.0297 | 1.6446 | 3.6194 | 0.0358 | 1.9945 | 4.2880 | 0.0436 |
| PEMSD8 | GMAN | 1.1374 | 2.6752 | 0.0234 | 1.3237 | 3.3950 | 0.0292 | 1.5120 | 4.0524 | 0.0355 |
| PEMSD8 | DCRNN | 1.1957 | 2.4679 | 0.0236 | 1.5347 | 3.3034 | 0.0325 | 1.9051 | 4.1451 | 0.0427 |
| PEMSD8 | MTGNN | 1.1402 | 2.5738 | 0.0234 | 1.3977 | 3.4913 | 0.0314 | 1.6323 | 4.2490 | 0.0394 |
| PEMSD8 | GWNet | 1.1184 | 2.5533 | 0.0233 | 1.3849 | 3.5327 | 0.0319 | 1.6043 | 4.2424 | 0.0391 |
| PEMSD8 | ADGformer | 1.0469 | 2.4516 | 0.0191 | 1.3185 | 3.2820 | 0.0285 | 1.5058 | 4.0514 | 0.0353 |
| England | HA | 7.0473 | 12.2133 | 0.0993 | 7.0441 | 12.2106 | 0.0993 | 7.0341 | 12.2005 | 0.0992 |
| England | VAR | 3.2135 | 5.7254 | 0.0419 | 4.1019 | 7.5764 | 0.0563 | 4.8372 | 8.9487 | 0.0693 |
| England | XGBoost | 3.1924 | 6.7177 | 0.0440 | 4.2988 | 8.6673 | 0.0631 | 5.5237 | 10.4756 | 0.0829 |
| England | LR | 3.7732 | 7.4331 | 0.0505 | 5.2816 | 9.7045 | 0.0737 | 6.5664 | 11.5991 | 0.0943 |
| England | ASTGCN | 3.2141 | 6.5404 | 0.0439 | 3.9262 | 7.9177 | 0.0556 | 4.6010 | 9.0793 | 0.0664 |
| England | GMAN | 2.6117 | 6.2874 | 0.0362 | 2.9558 | 7.3339 | 0.0431 | 3.3066 | 8.1404 | 0.0493 |
| England | DCRNN | 2.8363 | 6.1062 | 0.0381 | 3.4913 | 7.4473 | 0.0487 | 4.2813 | 8.7217 | 0.0610 |
| England | MTGNN | 2.5352 | 5.9789 | 0.0354 | 2.9216 | 7.0889 | 0.0430 | 3.3359 | 7.9936 | 0.0507 |
| England | GWNet | 2.5216 | 5.9610 | 0.0348 | 2.9610 | 7.1284 | 0.0436 | 3.4461 | 8.0687 | 0.0513 |
| England | ADGformer | 2.5190 | 5.9435 | 0.0348 | 2.9079 | 7.0450 | 0.0428 | 3.2434 | 7.7988 | 0.0488 |

Table 3 Prediction performance of ADGformer and its variant models at different prediction horizons

| Dataset | Model | Horizon 3 MAE | Horizon 3 RMSE | Horizon 3 MAPE | Horizon 6 MAE | Horizon 6 RMSE | Horizon 6 MAPE | Horizon 12 MAE | Horizon 12 RMSE | Horizon 12 MAPE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PEMSD4 | NMS-Trans | 1.3303 | 2.8103 | 0.0262 | 1.6433 | 3.7271 | 0.0351 | 1.9343 | 4.4935 | 0.0432 |
| PEMSD4 | NLGConv | 1.3538 | 2.8286 | 0.0276 | 1.6560 | 3.7843 | 0.0367 | 1.9629 | 4.5676 | 0.0441 |
| PEMSD4 | NASB | 1.3032 | 2.7723 | 0.0258 | 1.6111 | 3.6947 | 0.0339 | 1.8802 | 4.3990 | 0.0419 |
| PEMSD4 | NDGConv | 1.3452 | 2.8284 | 0.0264 | 1.6843 | 3.7827 | 0.0357 | 2.0160 | 4.6258 | 0.0451 |
| PEMSD4 | ADGformer | 1.3038 | 2.7286 | 0.0256 | 1.6060 | 3.6843 | 0.0327 | 1.8629 | 4.3676 | 0.0411 |
| PEMSD8 | NMS-Trans | 1.1039 | 2.4832 | 0.0219 | 1.3600 | 3.3328 | 0.0291 | 1.6071 | 4.0765 | 0.0363 |
| PEMSD8 | NLGConv | 1.1114 | 2.5005 | 0.0222 | 1.3896 | 3.4470 | 0.0304 | 1.6667 | 4.2637 | 0.0385 |
| PEMSD8 | NASB | 1.1218 | 2.5669 | 0.0229 | 1.3982 | 3.5334 | 0.0310 | 1.6313 | 4.2328 | 0.0379 |
| PEMSD8 | NDGConv | 1.1495 | 2.5632 | 0.0239 | 1.4425 | 3.4970 | 0.0319 | 1.7246 | 4.2631 | 0.0397 |
| PEMSD8 | ADGformer | 1.0469 | 2.4516 | 0.0191 | 1.3185 | 3.2820 | 0.0285 | 1.5058 | 4.0514 | 0.0353 |
| England | NMS-Trans | 2.5282 | 5.9949 | 0.0353 | 2.8890 | 7.1095 | 0.0433 | 3.2885 | 7.9006 | 0.0506 |
| England | NLGConv | 2.5132 | 6.2711 | 0.0373 | 2.9889 | 7.1787 | 0.0442 | 3.2977 | 7.9937 | 0.0599 |
| England | NASB | 2.5247 | 5.9847 | 0.0353 | 2.9119 | 7.0925 | 0.0443 | 3.2678 | 7.9172 | 0.0503 |
| England | NDGConv | 2.5557 | 6.0884 | 0.0362 | 2.9573 | 7.2583 | 0.0442 | 3.3398 | 7.9979 | 0.0513 |
| England | ADGformer | 2.5190 | 5.9435 | 0.0348 | 2.9079 | 7.0450 | 0.0428 | 3.2434 | 7.7988 | 0.0488 |
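The relative improvements quoted in the abstract can be reproduced from the table values. The short check below recomputes the Horizon 12 MAE reductions on PEMSD4 from Table 3, using the usual definition of relative reduction, (baseline − proposed) / baseline. The helper name is a hypothetical choice for illustration.

```python
def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error metric relative to `baseline`."""
    return (baseline - proposed) / baseline * 100.0

# MAE at Horizon 12 on PEMSD4, copied from Table 3.
variant_mae = {"NMS-Trans": 1.9343, "NLGConv": 1.9629, "NASB": 1.8802, "NDGConv": 2.0160}
adgformer_mae = 1.8629

reductions = {name: round(relative_reduction(mae, adgformer_mae), 2)
              for name, mae in variant_mae.items()}
for name, pct in reductions.items():
    print(f"{name}: {pct:.2f}%")
```

The results (3.69%, 5.09%, 0.92%, and 7.59%) agree with the figures reported in the abstract.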

