Light Field Angular Reconstruction Based on Template Alignment and Multi-stage Feature Learning


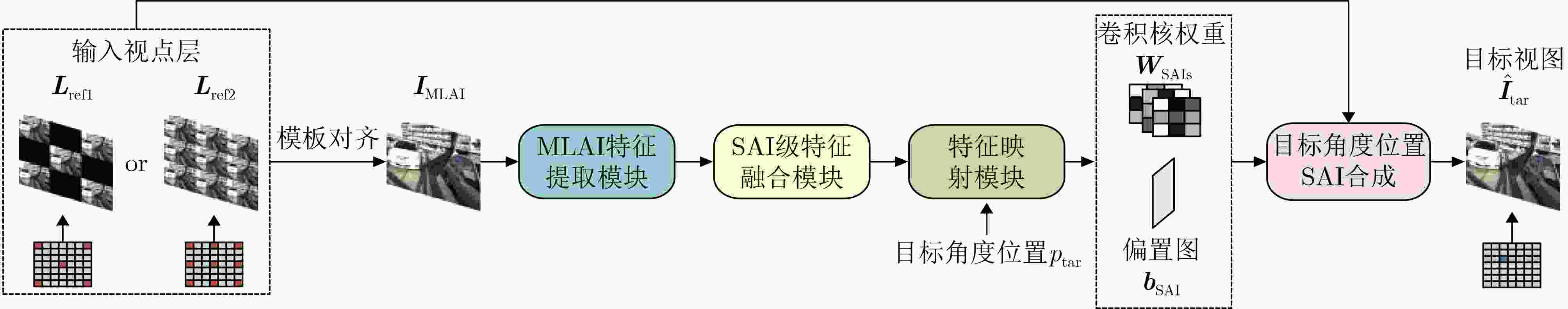

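Abstract: Existing light field image angular reconstruction methods exploit the intrinsic spatial-angular information of light field images, but they cannot handle sub-aperture image reconstruction tasks for different viewpoint layers at the same time, which makes it difficult to meet the needs of light field image scalable coding. To address this, viewpoint layers are treated as sparse templates, and this paper proposes a light field image angular reconstruction method that handles different angular sparse templates with a single model. Different angular sparse templates are regarded as different representations of the micro-lens array image, and template alignment integrates the input viewpoint layers into a micro-lens array image. Multi-stage feature learning, following a micro-lens-array-level to sub-aperture-level strategy, then processes the different input sparse templates. A dedicated training scheme allows the model to reference different angular sparse templates stably and to reconstruct sub-aperture images at arbitrary angular positions. Experimental results show that the proposed method references different sparse templates effectively and reconstructs sub-aperture images at arbitrary angular positions flexibly, and that the proposed template alignment and training method can be applied to other light field image super-resolution reconstruction methods to improve their ability to handle different angular sparse templates.

Abstract: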

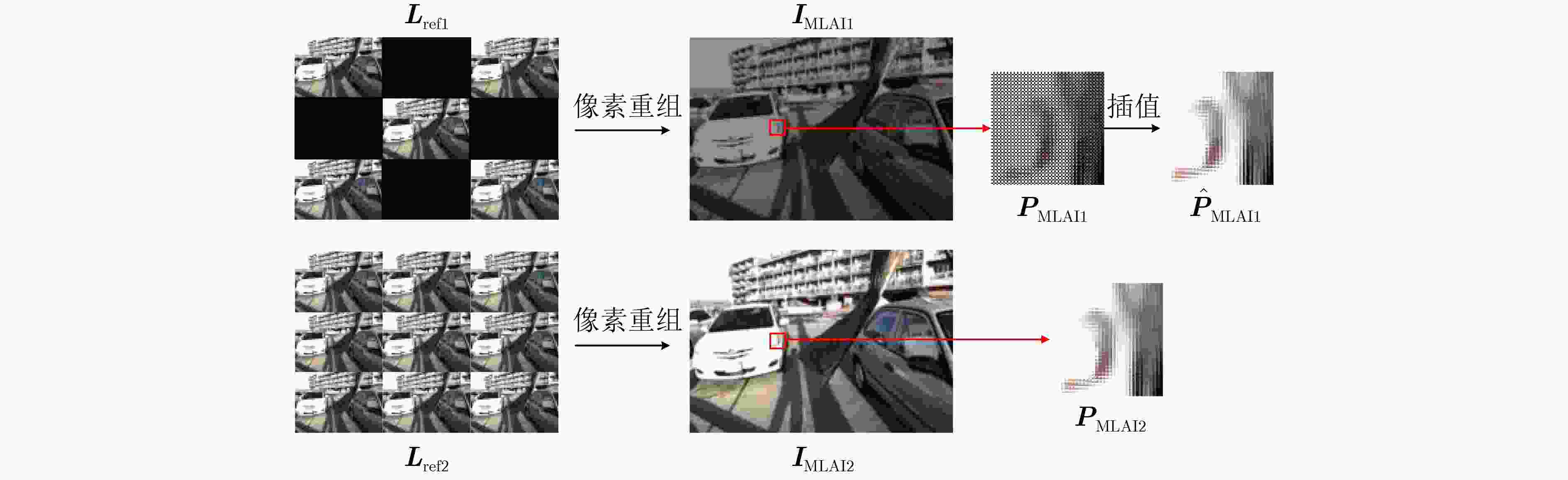

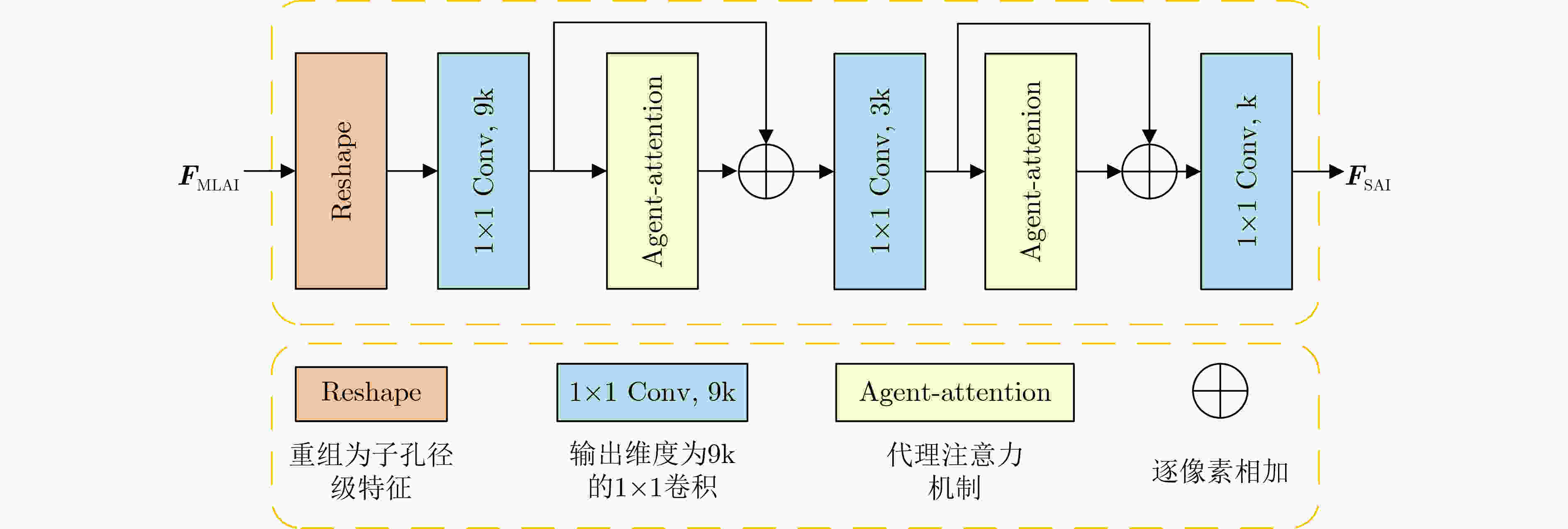

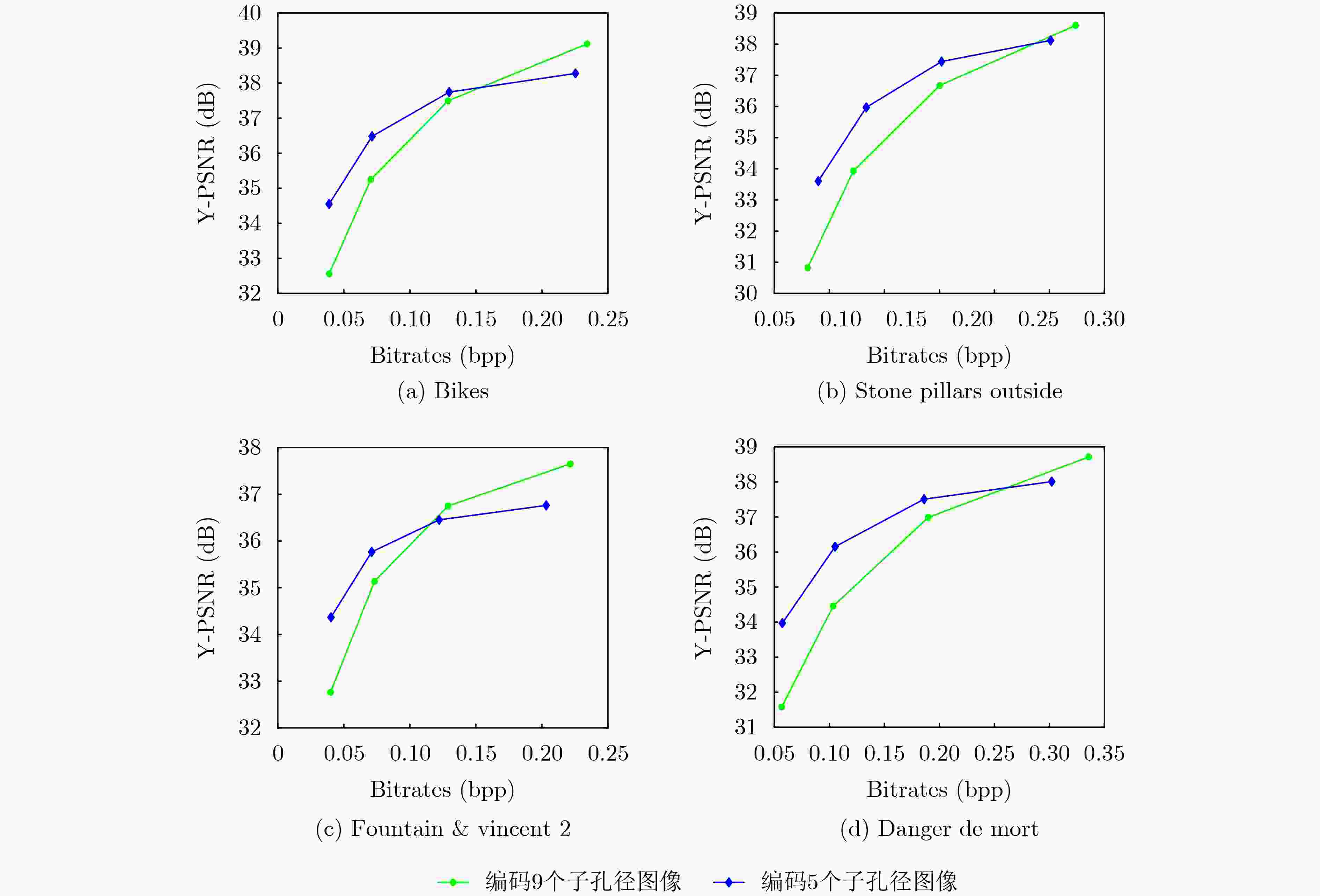

Objective: By placing a micro-lens array between the main lens and the imaging sensor, a light field camera captures both the intensity and the direction of light rays in a scene. However, because the sensor size is limited, dense spatial sampling comes at the cost of sparse angular sampling. Consequently, angular super-resolution reconstruction of Light Field Images (LFIs) is essential. Existing deep learning-based methods typically obtain dense LFIs in one of two ways. The direct approach models the correlation between spatial and angular information in the sparse LFI and then upsamples along the angular dimension to reconstruct the light field. The indirect approach generates intermediate outputs and reconstructs the LFI through operations on these outputs and the inputs. LFI coding methods based on sparse sampling generally select a subset of Sub-Aperture Images (SAIs) for compression and transmission, and use angular super-resolution to reconstruct the LFI at the decoder. In LFI scalable coding, the SAIs are divided into multiple viewpoint layers, some of which are selectively transmitted according to the bit rate allocation, while the remaining layers are reconstructed at the decoder. Although existing deep learning-based angular super-resolution methods yield promising results, they lack flexibility and generalizability across different numbers and positions of reference SAIs. This limits their ability to reconstruct SAIs at arbitrary viewpoints and makes them unsuitable for LFI scalable coding. To address this, a Light Field Angular Reconstruction method based on Template Alignment and multi-stage Feature learning (LFAR-TAF) is proposed, which handles different angular sparse templates with a single network model.
Methods: The pipeline consists of five steps: template alignment, Micro-Lens Array Image (MLAI) feature extraction, sub-aperture level feature fusion, feature mapping to the target angular position, and SAI synthesis at the target angular position. First, the different viewpoint layers used in LFI scalable coding are treated as different representations of the MLAI, referred to as light field sparse templates. To minimize discrepancies between these sparse templates and to make them easier for a single network model to fit, bilinear interpolation is employed to align the templates and generate the corresponding MLAIs. The MLAI Feature Learning (MLAIFL) module then uses a Residual Dense Block (RDB) to extract preliminary features from the MLAIs, thereby mitigating the differences introduced by bilinear interpolation. Since MLAI feature extraction may partially disrupt the angular consistency of the LFI, a conversion mechanism from MLAI features to sub-aperture features is devised, incorporating an SAI-level Feature Fusion (SAIF) module. In this step, the input MLAI features are reorganized along the channel dimension to align with the SAI dimension. Three 1×1 convolutions and two agent attention mechanisms are then employed for progressive fusion, supported by residual connections to accelerate convergence. In the feature mapping module, the extracted SAI features are mapped and adjusted to the target angular position according to the given target angular coordinates. Specifically, the SAI features are expanded in dimension using a recombination operator to match the spatial dimensions of the input features, and are concatenated with the input features. The concatenated target angular information is then fused with the light field features using a 1×1 convolution and an RDB. The fused features are subsequently fed into two RDBs to generate intermediate convolution kernel weights and bias maps.
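The template alignment step can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the sparse template is assumed to be a regular `u × v` grid of grayscale views (for example 3×3), the target is a dense `out_ang × out_ang` grid, and the helper names `interp_axis`, `align_template`, and `sai_to_mlai` are hypothetical. Irregular templates such as the five-view layout would need a different interpolation scheme that the abstract does not specify.

```python
import numpy as np

def interp_axis(x, out_len, axis):
    """Linearly resample `x` along `axis` to `out_len` samples, endpoints aligned."""
    in_len = x.shape[axis]
    pos = np.linspace(0.0, in_len - 1, out_len)  # target positions in input index units
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, in_len - 1)
    shape = [1] * x.ndim
    shape[axis] = out_len
    w = (pos - lo).reshape(shape)                # interpolation weight toward `hi`
    return (1.0 - w) * np.take(x, lo, axis=axis) + w * np.take(x, hi, axis=axis)

def align_template(sparse_views, out_ang):
    """Bilinearly interpolate a (u, v, H, W) view grid to (out_ang, out_ang, H, W).

    Assumption: the sparse views lie on a regular angular grid.
    """
    dense = interp_axis(sparse_views, out_ang, axis=0)
    return interp_axis(dense, out_ang, axis=1)

def sai_to_mlai(views):
    """Rearrange (U, V, H, W) sub-aperture images into an (H*U, W*V) MLAI.

    Each U x V macro-pixel holds all angular samples of one spatial position.
    """
    U, V, H, W = views.shape
    return views.transpose(2, 0, 3, 1).reshape(H * U, W * V)
```

With a 3×3 template of 4×5 views, `sai_to_mlai(align_template(views, 7))` yields a 28×35 array in which the original views sit unchanged at the aligned angular positions and the remaining positions hold interpolated values for the network to refine.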
In the SAI synthesis module for the target angular position, the viewpoints common to the different sparse templates serve as reference SAIs for the indirect synthesis method, which keeps the approach stable across templates. The reference SAIs are convolved with non-shared-weight convolution kernels of the same dimension, and the preliminary results are combined with the generated bias map to synthesize the target SAI with enhanced detail.

Results and Discussions: The performance of LFAR-TAF is evaluated on two publicly available natural scene LFI datasets: the STFLytro dataset and Kalantari et al.’s dataset. To ensure non-overlapping training and test sets, the same partitioning as in current advanced approaches for natural scene LFIs is adopted. Specifically, 100 natural scene LFIs (100 Scenes) from Kalantari et al.’s dataset are used for training, while the test set consists of 30 LFIs (30 Scenes) from Kalantari et al.’s dataset, together with 15 Reflective scenes and 25 Occlusion scenes from the STFLytro dataset. LFAR-TAF is compared with six angular super-resolution reconstruction methods (ShearedEPI, Yeung et al.’s method, LFASR-geo, FS-GAF, DistgASR, and IRVAE), using PSNR and SSIM as objective quality metrics for angular reconstruction from 3×3 to 7×7. Experimental results demonstrate that LFAR-TAF achieves the highest objective quality scores across all three test sets, with its PSNR on Reflective tied with that of DistgASR (Table 2). Notably, after training on angular reconstruction tasks from 3×3 to 7×7, the proposed method can reconstruct SAIs at any viewpoint using either five reference SAIs or 3×3 reference SAIs. Subjective visual comparisons further show that LFAR-TAF effectively restores the color and texture details of the target SAI from the reference SAIs. Ablation experiments reveal that removing either the MLAIFL or the SAIF module lowers the objective quality scores on the three test sets, with the loss being more pronounced when the MLAIFL module is omitted.
This highlights the importance of MLAI feature learning in modeling the spatial and angular correlations of LFIs, while the SAIF module improves the conversion from micro-lens array features to sub-aperture features. Additionally, coding experiments are conducted to assess the practical performance of the proposed method in LFI coding. Two angular sparse templates (five SAIs and 3×3 SAIs) are tested on four scenes from the EPFL dataset. The results show that encoding five SAIs achieves higher coding efficiency at low bit rates, while encoding the nine SAIs of the 3×3 sparse template performs better at high bit rates. These findings suggest that, to improve the compression efficiency of LFI scalable coding, different sparse templates can be selected according to the bit rate, and that LFAR-TAF reconstructs stably from various sparse templates after a single training process.

Conclusions: The proposed LFAR-TAF handles different sparse templates with a single network model, enabling flexible reconstruction of SAIs at any viewpoint by referencing SAIs that vary in number and position. This flexibility is particularly beneficial for LFI scalable coding. Moreover, the designed training approach can be applied to other LFI angular super-resolution methods to enhance their ability to handle diverse sparse templates.
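To make the SAI synthesis step described under Methods more concrete, the sketch below applies spatially varying kernels and a bias map to a set of reference views. It assumes that "non-shared weight" means each output pixel has its own `k × k` kernel per reference view, that `k` is odd, and that borders are handled by edge replication. None of these details are stated in the abstract, and the function name `synthesize_sai` and the array layouts are hypothetical.

```python
import numpy as np

def synthesize_sai(refs, kernels, bias):
    """Synthesize one target view from reference views with per-pixel kernels.

    refs:    (R, H, W) reference sub-aperture images
    kernels: (R, k, k, H, W) one k x k kernel per reference view and output pixel
             (assumed layout; k must be odd)
    bias:    (H, W) additive bias map
    """
    R, H, W = refs.shape
    k = kernels.shape[1]
    pad = k // 2
    # Edge replication at the borders is an assumption of this sketch.
    padded = np.pad(refs, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    out = np.asarray(bias, dtype=np.float64).copy()
    for i in range(k):
        for j in range(k):
            # Shifted window of every reference view, weighted by each pixel's own tap.
            out += (kernels[:, i, j] * padded[:, i:i + H, j:j + W]).sum(axis=0)
    return out
```

In this form the kernels perform a learned, pixel-wise blend of the reference views, and the bias map adds detail that no linear combination of the references can supply.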

Key words:

- Light Field Image (LFI)

- Angular reconstruction

- Scalable coding

- Sparse template


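Table 1 Partitioning of the training and test sets used in the experiments

Table 2 Quantitative comparison of different light field angular super-resolution reconstruction methods on the 3×3→7×7 reconstruction task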

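| Method | 30 Scenes PSNR (dB) | 30 Scenes SSIM | Occlusion PSNR (dB) | Occlusion SSIM | Reflective PSNR (dB) | Reflective SSIM |
|---|---|---|---|---|---|---|
| ShearedEPI[13] | 42.74 | 0.9867 | 39.84 | 0.9819 | 40.32 | 0.9647 |
| Yeung et al.[8] | 44.53 | 0.9900 | 42.06 | 0.9870 | 42.56 | 0.9711 |
| LFASR-geo[14] | 44.16 | 0.9889 | 41.71 | 0.9866 | 42.04 | 0.9693 |
| FS-GAF[15] | 44.32 | 0.9890 | 41.94 | 0.9870 | 42.62 | 0.9706 |
| DistgASR[11] | 45.90 | 0.9968 | 43.88 | 0.9960 | 43.95 | 0.9887 |
| IRVAE[16] | 45.64 | 0.9967 | 43.62 | 0.9958 | 42.48 | 0.9881 |
| LFAR-TAF | 46.07 | 0.9970 | 44.06 | 0.9962 | 43.95 | 0.9895 |

Table 3 Validation of the proposed template alignment and training strategy on different angular reconstruction methods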

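| Method | Reconstruction task (angular) | 30 Scenes PSNR (dB) | 30 Scenes SSIM | Occlusion PSNR (dB) | Occlusion SSIM | Reflective PSNR (dB) | Reflective SSIM |
|---|---|---|---|---|---|---|---|
| DistgASR[11] | 5→7×7 | 44.70 | 0.9959 | 41.84 | 0.9942 | 42.07 | 0.9849 |
| IRVAE[16] | 5→7×7 | 44.62 | 0.9959 | 41.87 | 0.9943 | 41.86 | 0.9850 |
| LFAR-TAF | 5→7×7 | 45.08 | 0.9960 | 42.53 | 0.9949 | 42.24 | 0.9841 |
| DistgASR[11] | 3×3→7×7 | 45.81 | 0.9967 | 43.73 | 0.9959 | 43.81 | 0.9883 |
| IRVAE[16] | 3×3→7×7 | 45.36 | 0.9965 | 43.07 | 0.9956 | 42.90 | 0.9874 |
| LFAR-TAF | 3×3→7×7 | 46.07 | 0.9970 | 44.06 | 0.9962 | 43.95 | 0.9895 |

Table 4 Ablation results for the core modules of the proposed method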

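| Method | 30 Scenes PSNR (dB) | 30 Scenes SSIM | Occlusion PSNR (dB) | Occlusion SSIM | Reflective PSNR (dB) | Reflective SSIM |
|---|---|---|---|---|---|---|
| w/o MLAIFL | 45.31 | 0.9958 | 43.78 | 0.9960 | 43.28 | 0.9883 |
| w/o SAIF | 45.88 | 0.9968 | 43.78 | 0.9960 | 43.88 | 0.9895 |
| LFAR-TAF | 46.07 | 0.9970 | 44.06 | 0.9962 | 43.95 | 0.9895 |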
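For readers less familiar with the PSNR columns in the tables above, a minimal sketch of the standard definition follows. The evaluation protocol behind the reported numbers (color channel, border cropping, averaging over views and scenes) is not given here, so the function below shows only the generic per-image formula; the name `psnr` and the default `peak=1.0` for images scaled to [0, 1] are assumptions.

```python
import numpy as np

def psnr(reference, reconstructed, peak=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    `peak` is the maximum possible pixel value (1.0 assumes images in [0, 1]).
    """
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, a gain of about 0.2 dB corresponds to roughly a 4 to 5 percent reduction in mean squared error.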

[1] CHEN Yeyao, JIANG Gangyi, YU Mei, et al. HDR light field imaging of dynamic scenes: A learning-based method and a benchmark dataset[J]. Pattern Recognition, 2024, 150: 110313. doi: 10.1016/j.patcog.2024.110313.

[2] ZHAO Chun and JEON B. Compact representation of light field data for refocusing and focal stack reconstruction using depth adaptive multi-CNN[J]. IEEE Transactions on Computational Imaging, 2024, 10: 170–180. doi: 10.1109/TCI.2023.3347910.

[3] ATTAL B, HUANG Jiabin, ZOLLHOFER M, et al. Learning neural light fields with ray-space embedding[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022: 19787–19797. doi: 10.1109/CVPR52688.2022.01920.

[4] HUR J, LEE J Y, CHOI J, et al. I see-through you: A framework for removing foreground occlusion in both sparse and dense light field images[C]. 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, USA, 2023: 229–238. doi: 10.1109/WACV56688.2023.00031.

[5] DENG Huiping, CAO Zhaoyang, XIANG Sen, et al. Saliency detection based on context-aware cross-layer feature fusion for light field images[J]. Journal of Electronics & Information Technology, 2023, 45(12): 4489–4498. doi: 10.11999/JEIT221270.

[6] WU Yingchun, WANG Yumei, WANG Anhong, et al. Light field all-in-focus image fusion based on edge enhanced guided filtering[J]. Journal of Electronics & Information Technology, 2020, 42(9): 2293–2301. doi: 10.11999/JEIT190723.

[7] YOON Y, JEON H G, YOO D, et al. Learning a deep convolutional network for light-field image super-resolution[C]. 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 2015: 57–65. doi: 10.1109/ICCVW.2015.17.

[8] YEUNG H W F, HOU Junhui, CHEN Jie, et al. Fast light field reconstruction with deep coarse-to-fine modeling of spatial-angular clues[C]. 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 138–154. doi: 10.1007/978-3-030-01231-1_9.

[9] WU Gaochang, ZHAO Mandan, WANG Liangyong, et al. Light field reconstruction using deep convolutional network on EPI[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, USA, 2017: 1638–1646. doi: 10.1109/CVPR.2017.178.

[10] WANG Yunlong, LIU Fei, WANG Zilei, et al. End-to-end view synthesis for light field imaging with pseudo 4DCNN[C]. 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 340–355. doi: 10.1007/978-3-030-01216-8_21.

[11] WANG Yingqian, WANG Longguang, WU Gaochang, et al. Disentangling light fields for super-resolution and disparity estimation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(1): 425–443. doi: 10.1109/TPAMI.2022.3152488.

[12] KALANTARI N K, WANG Tingchun, and RAMAMOORTHI R. Learning-based view synthesis for light field cameras[J]. ACM Transactions on Graphics, 2016, 35(6): 193. doi: 10.1145/2980179.2980251.

[13] WU Gaochang, LIU Yebin, DAI Qionghai, et al. Learning sheared EPI structure for light field reconstruction[J]. IEEE Transactions on Image Processing, 2019, 28(7): 3261–3273. doi: 10.1109/TIP.2019.2895463.

[14] JIN Jing, HOU Junhui, YUAN Hui, et al. Learning light field angular super-resolution via a geometry-aware network[C]. 2020 34th AAAI Conference on Artificial Intelligence, New York, USA, 2020: 11141–11148. doi: 10.1609/aaai.v34i07.6771.

[15] JIN Jing, HOU Junhui, CHEN Jie, et al. Deep coarse-to-fine dense light field reconstruction with flexible sampling and geometry-aware fusion[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(4): 1819–1836. doi: 10.1109/TPAMI.2020.3026039.

[16] HAN Kang and XIANG Wei. Inference-reconstruction variational autoencoder for light field image reconstruction[J]. IEEE Transactions on Image Processing, 2022, 31: 5629–5644. doi: 10.1109/TIP.2022.3197976.

[17] ÇETINKAYA E, AMIRPOUR H, and TIMMERER C. LFC-SASR: Light field coding using spatial and angular super-resolution[C]. 2022 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Taipei City, China, 2022: 1–6. doi: 10.1109/ICMEW56448.2022.9859373.

[18] AMIRPOUR H, TIMMERER C, and GHANBARI M. SLFC: Scalable light field coding[C]. 2021 Data Compression Conference (DCC), Snowbird, USA, 2021: 43–52. doi: 10.1109/DCC50243.2021.00012.

[19] ZHANG Yulun, TIAN Yapeng, KONG Yu, et al. Residual dense network for image super-resolution[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, USA, 2018: 2472–2481. doi: 10.1109/CVPR.2018.00262.

[20] HAN Dongchen, YE Tianzhu, HAN Yizeng, et al. Agent attention: On the integration of softmax and linear attention[C]. 18th European Conference on Computer Vision, Milan, Italy, 2024: 124–140. doi: 10.1007/978-3-031-72973-7_8.

[21] RAJ A S, LOWNEY M, SHAH R, et al. Stanford Lytro light field archive[EB/OL]. http://lightfields.stanford.edu/LF2016.html, 2016.

[22] RERABEK M and EBRAHIMI T. New light field image dataset[C]. 8th International Conference on Quality of Multimedia Experience, Lisbon, Portugal, 2016.
